Bamboo and/or agents fail with "No space left on device" when a partition runs out of inodes

Platform notice: Server and Data Center only. This article only applies to Atlassian products on the Server and Data Center platforms.

Support for Server* products ended on February 15th 2024. If you are running a Server product, you can visit the Atlassian Server end of support announcement to review your migration options.

*Except Fisheye and Crucible

Summary

Bamboo and/or its agents report "No space left on device" even though the disk (or partition) has enough free space. The following errors can be found in the <bamboo-home>/logs/atlassian-bamboo.log and/or <bamboo-agent-home>/atlassian-bamboo-agent.log files:

    2021-03-24 18:35:49,747 ERROR [http-nio-8085-exec-11577] [FiveOhOh] 500 Exception was thrown.
    java.io.IOException: No space left on device
        at sun.nio.ch.FileDispatcherImpl.force0(Native Method)
        at sun.nio.ch.FileDispatcherImpl.force(FileDispatcherImpl.java:76)
        at sun.nio.ch.FileChannelImpl.force(FileChannelImpl.java:388)

    2021-04-15 17:36:42,034 ERROR [http-nio-8085-exec-138] [FiveOhOh] 500 Exception was thrown.
    java.nio.file.FileSystemException: /path/to/file: No space left on device
        at sun.nio.fs.UnixException.translateToIOException(UnixException.java:91)
        at sun.nio.fs.UnixException.rethrowAsIOException(UnixException.java:102)
        at sun.nio.fs.UnixException.rethrowAsIOException(UnixException.java:107)
        at sun.nio.fs.UnixFileSystemProvider.newByteChannel(UnixFileSystemProvider.java:214)

Environment

- Any supported version of Bamboo hosted on Linux.

- A remote agent hosted on Linux.

Diagnosis

The following actions can be taken to diagnose the issue and confirm that you're running into the problem described in this article:

Confirm there's enough disk space available in the partition where the <bamboo-home>, <bamboo-shared-home>, and/or <bamboo-agent-home> folders are located by running the df -h command:

    $ df -h
    Filesystem      Size  Used Avail Use% Mounted on
    udev             16G     0   16G   0% /dev
    tmpfs           3.2G  2.5M  3.2G   1% /run
    /dev/nvme0n1p2  468G  214G  231G  49% /
    tmpfs            16G  475M   16G   3% /dev/shm
    tmpfs           5.0M  4.0K  5.0M   1% /run/lock
    tmpfs            16G     0   16G   0% /sys/fs/cgroup
    (...)

Confirm there's enough disk space available by creating a Bamboo support zip and looking for the <free-disk-space> property inside the application.xml file:

    <free-disk-space>291 GB</free-disk-space>

Check the inode usage on the partition where the <bamboo-home>, <bamboo-shared-home>, and/or <bamboo-agent-home> folders are located by running the df -i command:

    $ df -i
    Filesystem       Inodes    IUsed   IFree IUse% Mounted on
    udev            4070819      700 4070119    1% /dev
    tmpfs           4078019     1342 4076677    1% /run
    /dev/nvme0n1p2 31227904 30387693  840211   97% /
    tmpfs           4078019      559 4077460    1% /dev/shm
    tmpfs           4078019        5 4078014    1% /run/lock
    tmpfs           4078019       18 4078001    1% /sys/fs/cgroup
    /dev/loop3        10817    10817       0  100% /snap/core18/1988
    /dev/loop2        10790    10790       0  100% /snap/core18/1997
    /dev/loop4        19362    19362       0  100% /snap/dbeaver-ce/110
    /dev/loop5        27807    27807       0  100% /snap/gnome-3-28-1804/145
    /dev/loop6        11713    11713       0  100% /snap/core20/904
    /dev/loop7         3156     3156       0  100% /snap/remmina/4802
    /dev/nvme0n1p1        0        0       0     - /boot/efi

A high percentage in the IUse% column for the partition where Bamboo performs I/O indicates inode exhaustion. In this example, the problematic partition is /dev/nvme0n1p2, with 97% of its inodes in use. The /dev/loop* entries are read-only snap images, which always report 100% and can be ignored.
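The two df checks above can be combined into a small script that prints block usage and inode usage side by side for each Bamboo directory, which makes the "plenty of space, no inodes" pattern easy to spot. This is a minimal POSIX sh sketch, assuming GNU coreutils df; the function names df_pct and check_fs and the INODE_WARN_PCT threshold (default 90) are hypothetical and not part of Bamboo.

```shell
# Minimal POSIX sh sketch (assumes GNU coreutils df). Function names and
# INODE_WARN_PCT are hypothetical, not Bamboo settings.
INODE_WARN_PCT="${INODE_WARN_PCT:-90}"

# Print the percentage column (without "%") of POSIX-format df output.
# $1 selects the metric: -k for block usage, -i for inode usage.
# $2 is any path on the file system to inspect.
df_pct() {
    # -P keeps each file system on a single line, so column 5 is the percentage.
    df -P "$1" "$2" 2>/dev/null | awk 'NR == 2 { sub(/%/, "", $5); print $5 }'
}

# For each directory, print block usage next to inode usage and flag
# inode usage at or above INODE_WARN_PCT.
check_fs() {
    for dir in "$@"; do
        blocks=$(df_pct -k "$dir")
        inodes=$(df_pct -i "$dir")
        if [ -z "$blocks" ] || [ -z "$inodes" ]; then
            echo "ERROR $dir: cannot read file system usage"
        elif [ "$inodes" = "-" ]; then
            # Some file systems (e.g. vfat, like /boot/efi above) report no inodes.
            echo "N/A $dir: blocks ${blocks}%, inodes not reported"
        elif [ "$inodes" -ge "$INODE_WARN_PCT" ]; then
            echo "WARNING $dir: blocks ${blocks}%, inodes ${inodes}%"
        else
            echo "OK $dir: blocks ${blocks}%, inodes ${inodes}%"
        fi
    done
}
```

After sourcing the file, run for example check_fs /path/to/bamboo-home /path/to/bamboo-agent-home (placeholder paths). On the system shown in the df -i output above, a directory on the root partition would be flagged as WARNING, since its inode usage (97%) is above the threshold while its block usage stays around half.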

Cause

The partition where the <bamboo-home>, <bamboo-shared-home>, and/or <bamboo-agent-home> folders are located has run out of inodes. Every file and directory uses one inode, which stores its metadata and points to its data blocks. When all inodes are in use, no new file can be created even though free blocks remain, so the operating system reports "No space left on device". A detailed explanation of what inodes are can be found in this external link:

Solution

Solution 1

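The number of inodes is fixed when the file system is created on the partition (on ext4, for example, at mkfs time). The easiest way to solve this problem is to delete files or move them to another partition. The following command counts the files under each top-level folder of the current directory, without descending into other file systems (-xdev):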

    $ cd /path/to/folder/to/investigate
    $ sudo find . -xdev -type f | cut -d "/" -f 2 | sort | uniq -c | sort -n

Once enough files have been deleted or moved off this partition, restart Bamboo (or the server) so that any deleted files still held open by running processes are released: an inode is only freed once no process has the file open. Then run df -i again to verify that enough inodes are available.
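The find command above only counts regular files (-type f), but directories and symbolic links consume inodes as well. If your GNU coreutils du supports the --inodes option, the sketch below ranks the subdirectories of a folder by total inode count without crossing into other file systems (-x). top_inode_dirs is a hypothetical helper name; run it from a root shell when parts of the tree are not readable by your user, because unreadable directories are otherwise skipped silently.

```shell
# Hypothetical helper (assumes GNU du with --inodes support).
# Rank the subdirectories directly under a folder by the number of inodes
# they use (files, directories, symlinks ...), staying on one file system (-x).
# $1: folder to inspect (default: current directory)
# $2: how many of the largest entries to print (default: 20)
top_inode_dirs() {
    dir=${1:-.}
    limit=${2:-20}
    # Permission errors are discarded; the last line is the folder's own total.
    du --inodes -x -d 1 "$dir" 2>/dev/null | sort -n | tail -n "$limit"
}
```

For example, top_inode_dirs /path/to/bamboo-agent-home 10 prints the ten largest entries, with the total for the folder itself on the last line. Separately, sudo lsof +L1 lists files that have been deleted but are still held open by a process; their inodes are only released once that process closes them or is restarted.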

Solution 2 (agent only)

This solution only applies to the following scenarios:

- Agents running out of disk space due to an extremely large <bamboo-agent-home>/xml-data folder.

- Bamboo Server and/or Data Center running out of disk space when using local agents due to an extremely large <bamboo-agent-home>/xml-data folder.

This solution does NOT apply to the following scenario:

- Bamboo Server and/or Data Center running out of disk space while no local agents are in use (only remote and/or elastic agents).

If most of the files are under the <bamboo-agent-home>/xml-data folder (you can check this with the find command from Solution 1), enable the following option in your plans. This won't solve the problem immediately, since Bamboo only cleans the directory after new builds have run, but it will reduce the size of the xml-data folder and keep it at an acceptable level.

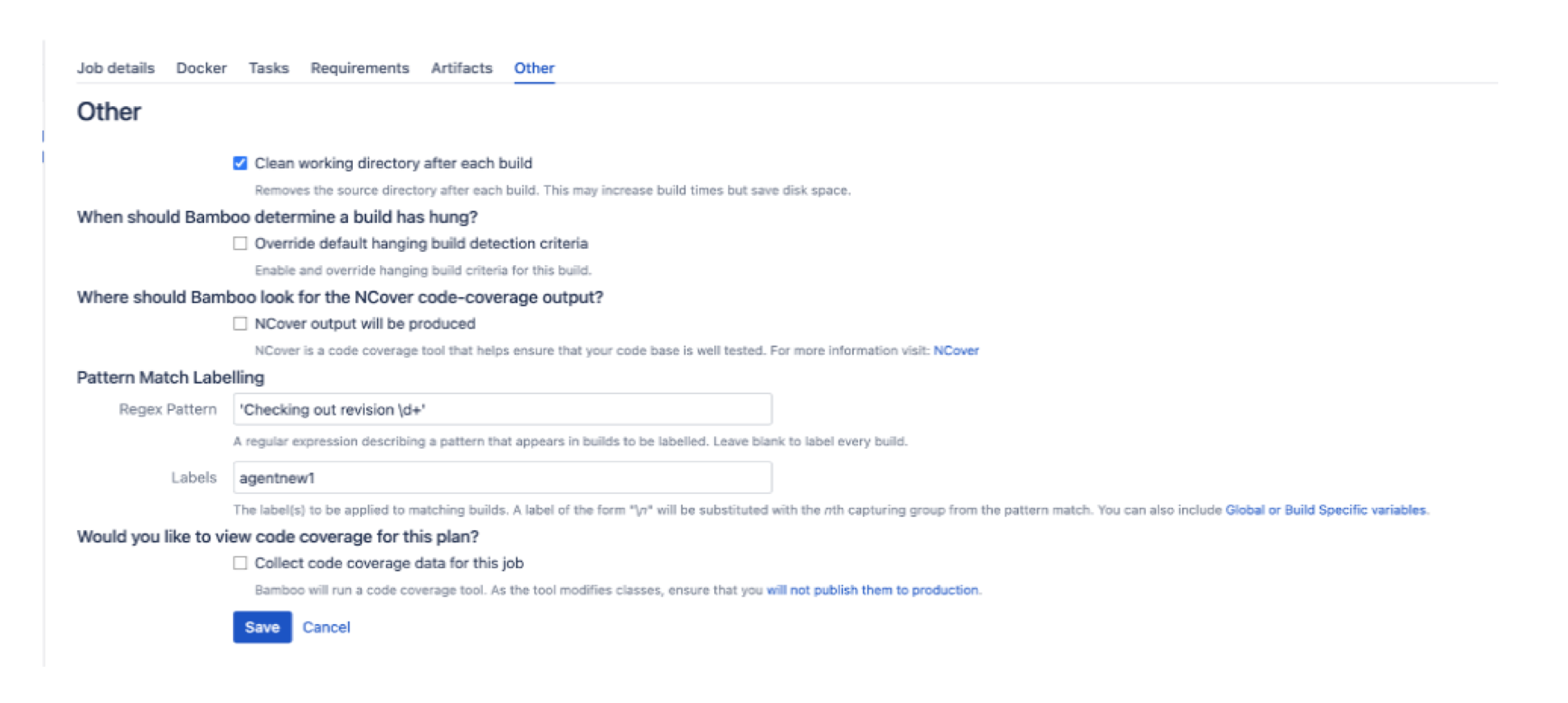

- Enable the Clean working directory after each build option in the job configuration to ensure that no files are left in the build working directory after the job completes.
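Because the Clean working directory after each build option only takes effect the next time a job runs, working directories of plans that rarely build can keep their files for a long time. The sketch below lists candidates for manual review. It assumes the default agent layout, in which job working directories live under <bamboo-agent-home>/xml-data/build-dir, and GNU find and du; stale_build_dirs is a hypothetical helper. A directory's modification time only changes when entries directly inside it are added or removed, so the age it reports is a rough signal. It prints candidates with their inode counts and deletes nothing.

```shell
# Hypothetical helper, assuming the default agent layout in which job working
# directories live under <bamboo-agent-home>/xml-data/build-dir.
# Lists (never deletes) job directories whose modification time is older
# than N days, with their inode counts (GNU du --inodes), smallest first.
# $1: agent home directory
# $2: age threshold in days (default: 30)
stale_build_dirs() {
    agent_home=$1
    days=${2:-30}
    build_dir="$agent_home/xml-data/build-dir"
    if [ ! -d "$build_dir" ]; then
        echo "no build-dir found under $agent_home" >&2
        return 1
    fi
    # mtime only reflects changes to direct entries, so treat it as approximate.
    find "$build_dir" -mindepth 1 -maxdepth 1 -type d -mtime +"$days" \
        -exec du --inodes -s {} + | sort -n
}
```

For example, stale_build_dirs /path/to/bamboo-agent-home 30 lists job directories untouched for more than 30 days. Only remove a directory after confirming that no build is currently running on that agent.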