Performance and scale testing

With every Jira release, we’re publishing a performance and scaling report that compares the performance of the current Jira version with the previous one. The report contains results of how various data dimensions (number of custom fields, issues, projects, and so on) affect Jira. You can check which of these data dimensions should be limited to have the best results when scaling Jira.

This report is for Jira 9.14. If you’re looking for other reports, select another version in the upper-right corner of the screen.

Introduction

When some Jira administrators think about how to scale Jira, they often focus on the number of issues a single Jira instance can hold. However, the number of issues isn’t the only factor that determines the scale of a Jira instance. To understand how a large instance may perform, you need to consider multiple factors.

This page explains how Jira performs across different versions and configurations. So whether you are a new Jira evaluator who wants to understand how Jira can scale to your growing needs or you're a seasoned Jira admin who’s interested in taking Jira to the next level, this page is here to help.

There are two main approaches, which can be used in combination to scale Jira across your entire organization:

- Scale a single Jira instance

- Use Jira Data Center in a clustered multi-node configuration

Here, we'll explore techniques to get the most out of Jira that are common to both approaches. For additional information on Jira Data Center and how it can improve performance under concurrent load, please refer to our Jira Data Center page.

Determining the scale of a single Jira instance

Jira's flexibility leads to significant diversity in our customers' configurations. Analytics data shows that nearly every customer data set has its own unique characteristics. Different Jira instances grow at different rates in each data dimension. Frequently, a few dimensions become significantly bigger than the others. In one case, the issue count may grow rapidly, while the project count remains constant. In another case, the custom field count may be huge, while the issue count remains small.

Many organizations have their own unique processes and needs. Jira's ability to support these various use cases explains the diversity of the data set. However, each data dimension can influence Jira's speed. This influence is often neither constant nor linear.

To provide an optimal experience and avoid performance degradation, it’s important to understand how specific data dimensions impact speed.

There are multiple factors that may affect Jira's performance in your organization. These factors fall into the following categories (in no particular order):

- Data size, meaning the number of:

    - issues

    - comments

    - attachments

    - projects

    - project attributes (such as custom fields, issue types, and schemes)

    - registered users and groups

    - boards

    - issues on any given board (in the case of Jira Software)

- Usage patterns, meaning the number or volume of:

    - users concurrently logged into Jira

    - concurrent operations

    - email notifications

- Configuration, meaning the number of:

    - plugins (some of which may have their own memory requirements)

    - workflow step executions (such as transitions and post functions)

    - jobs and scheduled services

- Deployment environment:

    - The Jira version used

    - The server Jira runs on

    - The database used and connectivity to the database

    - The operating system, including its file system

    - JVM configuration

The following sections will show you how Jira's speed can be influenced by the size and characteristics of data stored in the database.

Testing methodology

Let’s start with a description of the testing methodology we followed in the performance tests and the hardware specifications of the testing environment we used.

Test data set

Before we started the test, we needed to determine what size and shape of the data set represents a typical large Jira instance.

To achieve that, we collected analytics data to get an idea of our customers' environments and what difficulties they face when scaling Jira in large organizations. Then, we rounded the values of the 999th permille of each data dimension included in the tests and used an internal data generation solution to generate a random test data set.
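As a rough illustration of that step, the sketch below computes the 999th permille (the 99.9th percentile) of a sample and rounds it to a "nice" value. The function names, the interpolation method, and the choice of two significant digits are assumptions made for this example; the report doesn't describe how the rounding was actually done.

```python
import math
from statistics import quantiles


def permille_999(values):
    """Return the 999th permille (99.9th percentile) of a sample."""
    # quantiles(n=1000) yields 999 cut points; the last one is the 999th permille.
    return quantiles(values, n=1000, method="inclusive")[-1]


def round_to_significant(value, digits=2):
    """Round a positive number to the given count of significant digits (assumed rule)."""
    if value <= 0:
        return 0
    magnitude = math.floor(math.log10(value))
    factor = 10 ** (magnitude - digits + 1)
    return int(round(value / factor) * factor)


if __name__ == "__main__":
    # Hypothetical per-instance custom field counts, not real analytics data.
    custom_field_counts = list(range(1, 1501))
    raw = permille_999(custom_field_counts)
    print(raw, round_to_significant(raw))
```

Using a high permille rather than the maximum keeps a handful of extreme outliers from defining what a "typical large instance" looks like.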

To bring performance test results closer to the real-world scenarios encountered by our customers, we’ve switched from Data Generator for Jira to a new, internal only, and more actively maintained data generation tool.

The following table lists the exact number of elements in each data dimension.

| Data dimension | Value |
|---|---|
| Agile boards | 1450 |
| Attachments | 660,00 |
| Comments | 2,900,000 |
| Custom fields | 1400 |
| Groups | 22,500 |
| Issues | 1,000,000 |
| Permissions | 200 |
| Projects | 1500 |
| Security levels | 170 |
| Users | 100,000 |
| Workflows | 1500 |

Actions performed

The following table provides the proportions of actions included in the scenario for our testing persona, representing the percentage of times each action is performed during the test. By “action”, we mean a complete operation like opening an issue, adding a comment, or viewing the backlog in a browser window.

| Action | Min. | Max. |
|---|---|---|
| Add Comment | 1.270% | 1.284% |
| Browse Boards | 1.382% | 1.398% |
| Browse Projects | 3.371% | 3.403% |
| Create Issue | 3.129% | 3.159% |
| Edit Issue | 3.325% | 3.375% |
| Log In | 0.298% | 0.329% |
| Project Summary | 3.139% | 3.159% |
| Search with JQL | 13.668% | 13.750% |
| Switch issue nav view | 13.680% | 13.769% |
| View Backlog | 5.821% | 5.913% |
| View Board | 5.828% | 5.915% |
| View Dashboard | 6.967% | 7.026% |
| View History Tab | 1.309% | 1.332% |
| View Issue | 36.376% | 36.578% |
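To make the proportions in the table above more concrete, here is a minimal sketch of a weighted random action picker. It assumes the midpoint of each Min./Max. pair is used as a sampling weight; the report doesn't say how the test scenario was implemented, so `ACTION_RANGES`, `build_weights`, and `pick_actions` are hypothetical names used only for illustration.

```python
import random
from collections import Counter

# (Min., Max.) percentages copied from the table above.
ACTION_RANGES = {
    "Add Comment": (1.270, 1.284),
    "Browse Boards": (1.382, 1.398),
    "Browse Projects": (3.371, 3.403),
    "Create Issue": (3.129, 3.159),
    "Edit Issue": (3.325, 3.375),
    "Log In": (0.298, 0.329),
    "Project Summary": (3.139, 3.159),
    "Search with JQL": (13.668, 13.750),
    "Switch issue nav view": (13.680, 13.769),
    "View Backlog": (5.821, 5.913),
    "View Board": (5.828, 5.915),
    "View Dashboard": (6.967, 7.026),
    "View History Tab": (1.309, 1.332),
    "View Issue": (36.376, 36.578),
}


def build_weights(ranges):
    """Use the midpoint of each range as the sampling weight (an assumption)."""
    return {name: (low + high) / 2 for name, (low, high) in ranges.items()}


def pick_actions(count, seed=None):
    """Draw `count` action names at random, proportionally to their weights."""
    rng = random.Random(seed)
    weights = build_weights(ACTION_RANGES)
    return rng.choices(list(weights), weights=list(weights.values()), k=count)


if __name__ == "__main__":
    sample = pick_actions(10_000, seed=42)
    for name, hits in Counter(sample).most_common(3):
        print(f"{name}: {hits / len(sample):.1%}")
```

`random.choices` normalizes the weights itself, so they don't need to add up to exactly 100.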

Test environment

The performance tests were all run on a set of EC2 instances, deployed in the eu-west-1 region. For each test, the entire environment was reset and rebuilt.

To run the tests, we used 20 scripted browsers and measured the time taken to perform the actions. Each browser was scripted to perform a random action from a predefined list of actions and immediately move on to the next action (that is, zero think time). As a result, each browser performed substantially more tasks than a real user could, so you shouldn't treat the number of browsers as the number of real-world concurrent users.

Each test was run for 20 minutes, after which statistics were collected.
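The loop each scripted browser follows can be sketched roughly as below. This is a simplified stand-in, not the actual test harness: it uses threads and plain Python callables instead of real browsers, and `run_worker`, `run_load`, and the stub actions are hypothetical names introduced for this example.

```python
import random
import threading
import time


def run_worker(actions, duration_s, timings, lock, rng):
    """Pick random actions back to back until the deadline, timing each one."""
    deadline = time.monotonic() + duration_s
    while time.monotonic() < deadline:
        name, action = rng.choice(actions)
        started = time.perf_counter()
        action()
        elapsed_ms = (time.perf_counter() - started) * 1000
        with lock:
            timings.setdefault(name, []).append(elapsed_ms)
        # No sleep between iterations: this is the "zero think time" behavior.


def run_load(actions, workers=20, duration_s=20 * 60, seed=0):
    """Run `workers` concurrent loops and return response times (ms) per action."""
    timings = {}
    lock = threading.Lock()
    threads = [
        threading.Thread(
            target=run_worker,
            args=(actions, duration_s, timings, lock, random.Random(seed + i)),
        )
        for i in range(workers)
    ]
    for thread in threads:
        thread.start()
    for thread in threads:
        thread.join()
    return timings


if __name__ == "__main__":
    # Stub actions that only sleep; a real test would drive a browser instead.
    stub_actions = [
        ("View Issue", lambda: time.sleep(0.002)),
        ("Add Comment", lambda: time.sleep(0.001)),
    ]
    results = run_load(stub_actions, workers=4, duration_s=0.2)
    for name, samples in results.items():
        print(name, len(samples), "samples")
```

Because no worker ever pauses, 20 such loops generate far more requests than 20 people would, which is why the browser count shouldn't be read as a concurrent user count.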

The Jira Server environment consisted of:

- One Jira node

- Database on a separate node

- Load generator on a separate node

The Jira Data Center environment consisted of:

- Two Jira nodes

- Database on a separate node

- Load generator on a separate node

- Shared home directory on a separate node

- Load balancer (Apache Load Balancer running on EC2)

Below is a brief summary of the hardware specifications of each EC2 instance we used to run performance tests on Jira Server and Jira Data Center:

| Jira | | | |
|---|---|---|---|
| Hardware | | Software | |
| EC2 type | c5d.9xlarge (EC2 types); Jira Server: 1 node; Jira Data Center: 2 nodes | Operating system | Ubuntu 20.04 LTS |
| CPU type | 3.0 GHz Intel Xeon Platinum 8000-series | Java platform | Java 1.8.0 |
| CPU core count | 36 | Java options | 16 GB heap size |
| Memory | 72 GB | | |
| Disk | 900 GB NVMe SSD | | |

| Database | | | |
|---|---|---|---|
| Hardware | | Software | |
| EC2 type | c5d.9xlarge (EC2 types) | Operating system | Ubuntu 20.04 LTS |
| CPU type | Intel Xeon E5-2666 v3 (Haswell) | Database | MySQL 5.7.32 |
| CPU core count | 36 | | |
| Memory | 72 GB | | |
| Disk | EBS 100 GB gp2 | | |

| Load generator | | | |
|---|---|---|---|
| Hardware | | Software | |
| EC2 type | c5d.9xlarge (EC2 types) | Operating system | Ubuntu 20.04 LTS |
| CPU type | Intel Xeon E5-2666 v3 (Haswell) | Java platform | Java JDK 8u162 |
| CPU core count | 36 | Additional software | Google Chrome (latest stable), Chromedriver (latest stable), WebDriver 3.141.59 |
| Memory | 72 GB | | |
| Disk | EBS 30 GB gp2 | | |

Results

In this section, we present the results of all the scalability tests we performed to investigate the relative impact of various configuration values. This is the process we followed:

As a reference for the test, we used a Jira instance containing the baseline test data set outlined previously and ran the full performance test cycle on it.

To focus on data dimensions and their effect on performance, instead of testing individual actions, we calculated a mean of all actions from the performance tests.

Next, we doubled each attribute in the baseline data set and ran independent performance tests for each doubled value while leaving all the other attributes in the baseline data set unchanged. That is, we ran the test with a doubled number of issues or a doubled number of custom fields. To reduce noise, we repeated the tests until the results reached a state of convergence. In other words, a state in which the difference in the aggregated mean response time for each data dimension didn’t exceed 10 ms with each subsequent test run.
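The convergence rule can be sketched as follows. This assumes "convergence" means that the aggregated mean of two consecutive runs differs by no more than 10 ms; `run_test` is a hypothetical callable that returns the response times (in ms) of one test run, and `max_runs` is an added safety limit that the report doesn't mention.

```python
from statistics import mean


def has_converged(run_means_ms, threshold_ms=10.0):
    """True when the two most recent run means differ by at most the threshold."""
    if len(run_means_ms) < 2:
        return False
    return abs(run_means_ms[-1] - run_means_ms[-2]) <= threshold_ms


def run_until_converged(run_test, threshold_ms=10.0, max_runs=20):
    """Repeat a test run until consecutive aggregated means stabilize."""
    means = []
    for _ in range(max_runs):
        response_times_ms = run_test()  # hypothetical: one full test run
        means.append(mean(response_times_ms))
        if has_converged(means, threshold_ms):
            break
    return means


if __name__ == "__main__":
    # Made-up response times for three runs, used only to exercise the loop.
    fake_runs = iter([[700, 760], [700, 720], [700, 716]])
    print(run_until_converged(lambda: next(fake_runs)))
```

The same loop would be run once per doubled data dimension, with every other dimension left at its baseline value.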

Then, we compared the response times from the doubled data set test cycles with the reference results. With this approach, we could isolate and observe how the growing size of individual Jira configuration items affects the speed of an already large Jira instance.

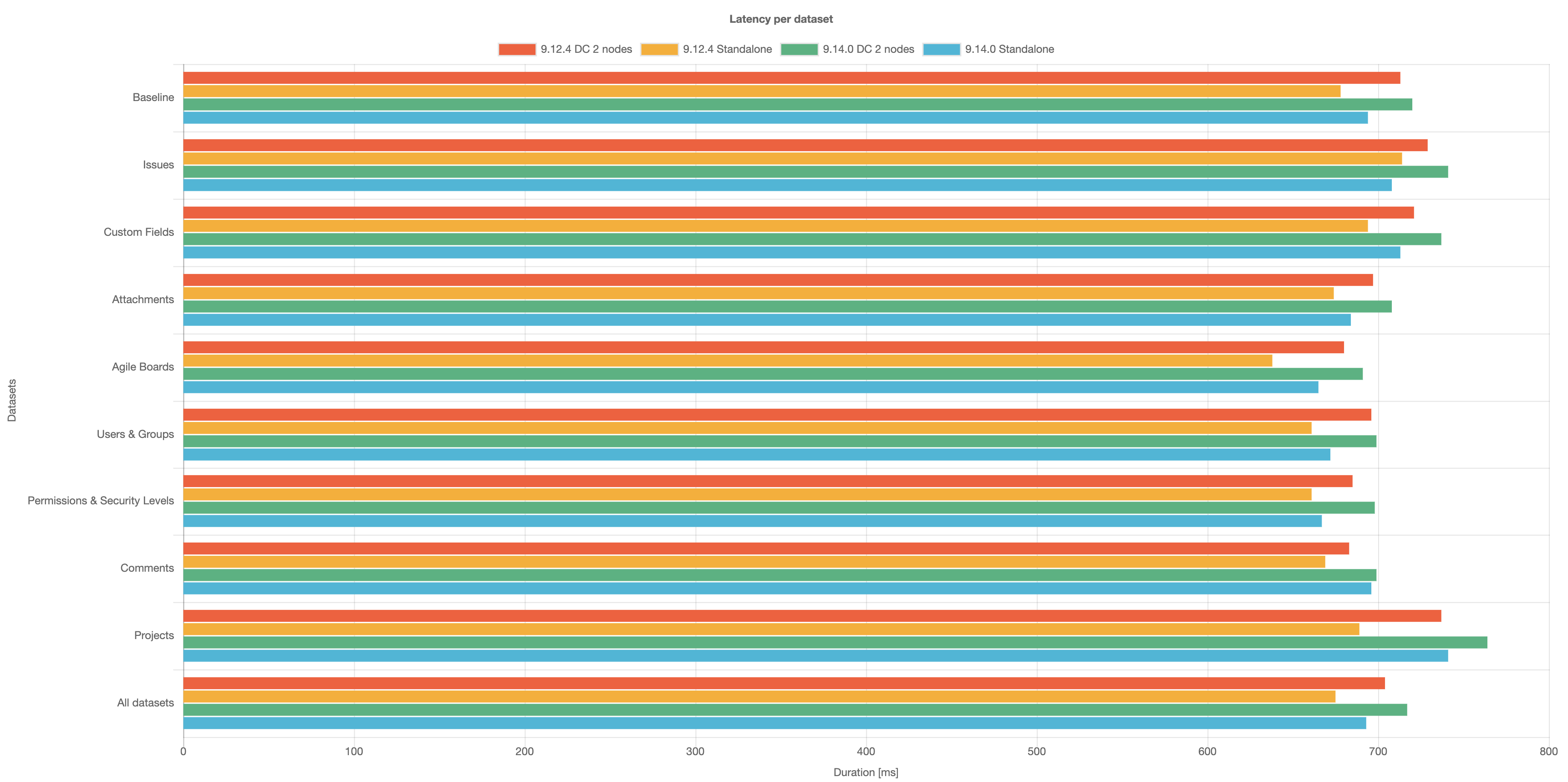

Finally, here are the response times per data set. To give you a bigger picture view of how Jira’s responsiveness has changed over time, we’re publishing a comparison between Jira 9.14.0 and Jira 9.12.4.

Comparison of response times per data set between Jira 9.14.0 and Jira 9.12.4

The exact response time values from the chart above are listed in the following tables.

Jira Data Center 9.14.0 (1 node) vs. Jira Server 9.12.4

All response times in ms.

| Data dimension | Response time (Jira 9.12.4) | Response time (Jira 9.14.0) |
|---|---|---|
| Baseline | 678 | 694 |
| Agile boards | 638 | 665 |
| Custom fields | 694 | 713 |
| Issues | 714 | 708 |
| Attachments | 674 | 684 |
| Projects | 689 | 741 |
| Permissions & security levels | 661 | 667 |
| Users & groups | 661 | 672 |
| Comments | 669 | 696 |
| All datasets | 675 | 693 |

Jira Data Center 9.14.0 vs. Jira Data Center 9.12.4 (2 nodes)

All response times in ms.

| Data dimension | Response time (Jira 9.12.4) | Response time (Jira 9.14.0) |
|---|---|---|
| Baseline | 713 | 720 |
| Agile boards | 680 | 691 |
| Custom fields | 721 | 737 |
| Issues | 729 | 741 |
| Attachments | 697 | 708 |
| Projects | 737 | 764 |
| Permissions & security levels | 685 | 698 |
| Users & groups | 696 | 699 |
| Comments | 683 | 699 |
| All datasets | 704 | 717 |
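If you want to read the two-node table above as differences rather than absolute values, a small script like the following can help. It only restates the published numbers as an absolute and a relative change per data dimension; `DATA_CENTER_2_NODES_MS` and `compare_versions` are names made up for this example.

```python
# (Jira 9.12.4, Jira 9.14.0) response times in ms, copied from the table above.
DATA_CENTER_2_NODES_MS = {
    "Baseline": (713, 720),
    "Agile boards": (680, 691),
    "Custom fields": (721, 737),
    "Issues": (729, 741),
    "Attachments": (697, 708),
    "Projects": (737, 764),
    "Permissions & security levels": (685, 698),
    "Users & groups": (696, 699),
    "Comments": (683, 699),
    "All datasets": (704, 717),
}


def compare_versions(results):
    """Return (dimension, delta in ms, delta in percent) for each data dimension."""
    rows = []
    for dimension, (old_ms, new_ms) in results.items():
        delta_ms = new_ms - old_ms
        delta_percent = 100 * delta_ms / old_ms
        rows.append((dimension, delta_ms, round(delta_percent, 1)))
    return rows


if __name__ == "__main__":
    for dimension, delta_ms, delta_percent in compare_versions(DATA_CENTER_2_NODES_MS):
        print(f"{dimension}: {delta_ms:+d} ms ({delta_percent:+.1f}%)")
```

In this table, the Projects dimension shows the largest difference between the two versions (27 ms).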

Further resources

If you'd like to learn more about scaling and optimizing Jira, here are a few additional resources that you can refer to.

Archiving issues

The number of issues affects Jira's performance, so you might want to archive issues that are no longer needed. You may also find that a large number of issues clutters the view in Jira, which is another reason to archive outdated issues from your instance. See Archiving projects.

User Management

As your Jira user base grows, you may want to take a look at the following:

- Connecting Jira to your Directory for authentication, user and group management.

- Connecting to Crowd or Another Jira Server for User Management.

- Allowing Other Applications to Connect to Jira for User Management.

Jira Knowledge Base

For detailed guidelines on specific performance-related topics, refer to the Troubleshoot performance issues in Jira server article in the Jira Knowledge Base.

Jira Enterprise Services

For help with scaling Jira in your organization directly from experienced Atlassians, check out our Additional Support Services offering.

Atlassian Experts

The Atlassian Experts in your local area can also help you scale Jira in your own environment.