Resolving pod eviction for the Kubernetes runner autoscaler due to "ephemeral-storage" usage

Platform Notice: Cloud - This article applies to Atlassian products on the cloud platform.

Summary

Consider a case where users have configured runners on Kubernetes using the runner autoscaler script. Various issues can arise during this setup. One such scenario is pod eviction, which occurs when runners created by the autoscaler consume a resource (such as ephemeral storage) for which no requests or limits were defined.

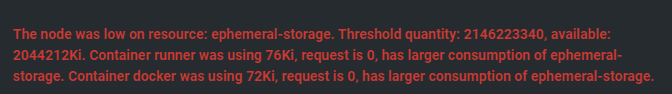

Sample Error:

Solution

In this case, check any configured metrics for resource spikes, and look for messages related to memory pressure, disk pressure, or node issues.
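As a starting point for that check, evicted pods can be listed together with the eviction message Kubernetes recorded for them. The following is a minimal sketch, not part of the autoscaler itself: `find_evicted_pods` is a hypothetical helper, and it assumes the pod list was exported as JSON, for example with `kubectl get pods -n <namespace> -o json`. The sample data below is made up for illustration.

```python
def find_evicted_pods(pod_list):
    """Return (pod name, eviction message) pairs from a pod list.

    `pod_list` is the parsed JSON produced by
    `kubectl get pods -n <namespace> -o json` (namespace is an assumption).
    Evicted pods carry status.reason == "Evicted".
    """
    evicted = []
    for pod in pod_list.get("items", []):
        status = pod.get("status", {})
        if status.get("reason") == "Evicted":
            name = pod.get("metadata", {}).get("name", "<unknown>")
            evicted.append((name, status.get("message", "")))
    return evicted


# Illustrative sample only; real input would come from kubectl.
sample = {
    "items": [
        {
            "metadata": {"name": "runner-a"},
            "status": {
                "phase": "Failed",
                "reason": "Evicted",
                "message": "The node was low on resource: ephemeral-storage.",
            },
        },
        {"metadata": {"name": "runner-b"}, "status": {"phase": "Running"}},
    ]
}

for name, message in find_evicted_pods(sample):
    print(f"{name}: {message}")
```

The message field usually names the resource that triggered the eviction, which helps decide which request or limit to adjust.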

Pod eviction can have various causes, one of which is described below:

- In the example above, the pod was evicted due to low ephemeral storage, which means the node does not have enough available disk space to satisfy the storage requirements of the pods running on it.

- In such cases, users can edit the config map and add the missing resources, which should help resolve the issue, as in the case of low ephemeral storage.

- Users can also tune the parameters available in kustomize/values/runners_config.yaml or config/runners-autoscaler-cm.yaml, based on their requirements.

Example:

    resources:  # This is memory and cpu resources section that you can configure via config map settings file.
      requests:
        memory: "<%requests_memory%>"  # mandatory, don't modify
        cpu: "<%requests_cpu%>"  # mandatory, don't modify
        ephemeral-storage: "1Gi"
      limits:
        memory: "<%limits_memory%>"  # mandatory, don't modify
        cpu: "<%limits_cpu%>"  # mandatory, don't modify
        ephemeral-storage: "2Gi"
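Before applying a change like the one in the example, it can help to confirm that both ephemeral-storage values are set and that the request does not exceed the limit, since Kubernetes rejects a request larger than its limit. The sketch below is illustrative only: `parse_quantity` and `check_ephemeral_storage` are hypothetical helpers, the parser handles plain numbers and the common binary and decimal suffixes but not the milli (`m`) suffix, and the `resources` dictionary simply mirrors the example values.

```python
from decimal import Decimal

# Suffix multipliers for Kubernetes-style quantities (binary and decimal).
_SUFFIXES = {
    "Ki": 2**10, "Mi": 2**20, "Gi": 2**30, "Ti": 2**40, "Pi": 2**50, "Ei": 2**60,
    "k": 10**3, "M": 10**6, "G": 10**9, "T": 10**12, "P": 10**15, "E": 10**18,
}


def parse_quantity(value):
    """Convert a quantity such as '1Gi' or '500M' to a number of bytes."""
    text = str(value).strip()
    # Try two-character suffixes first so 'Gi' is not mistaken for a bare unit.
    for suffix in sorted(_SUFFIXES, key=len, reverse=True):
        if text.endswith(suffix):
            return int(Decimal(text[: -len(suffix)]) * _SUFFIXES[suffix])
    return int(Decimal(text))


def check_ephemeral_storage(resources):
    """Return a list of problems found in a 'resources' mapping."""
    key = "ephemeral-storage"
    request = resources.get("requests", {}).get(key)
    limit = resources.get("limits", {}).get(key)
    problems = []
    if request is None:
        problems.append("no ephemeral-storage request set")
    if limit is None:
        problems.append("no ephemeral-storage limit set")
    if request is not None and limit is not None:
        if parse_quantity(request) > parse_quantity(limit):
            problems.append("request exceeds limit")
    return problems


# Values taken from the example above.
resources = {
    "requests": {"ephemeral-storage": "1Gi"},
    "limits": {"ephemeral-storage": "2Gi"},
}
print(check_ephemeral_storage(resources))  # prints []
```

An empty list means the example values are consistent. Whether 1Gi and 2Gi are large enough still depends on how much disk the builds actually write.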