Jira Service Management - Troubleshooting why the JSM Mail Handler stopped processing new incoming emails

Platform Notice: Data Center - This article applies to Atlassian products on the Data Center platform.

Note that this knowledge base article was created for the Data Center version of the product. Data Center knowledge base articles for non-Data Center-specific features may also work for Server versions of the product, however they have not been tested. Support for Server* products ended on February 15th 2024. If you are running a Server product, you can visit the Atlassian Server end of support announcement to review your migration options.

*Except Fisheye and Crucible

Summary

Jira Service Management (JSM) projects can be configured to ingest incoming emails and either convert them into new requests, or comments to be added to existing requests. This functionality is handled by the JSM Mail Handler which is configured via the page Project Settings > Email Requests.

In some situations, the JSM Mail Handler functionality might stop working (due to existing bugs or other factors), preventing any new incoming email from being processed by any JSM project. The purpose of this article is to provide:

- a way to troubleshoot most scenarios where the JSM Mail Handler stops processing new incoming emails

- the list of the most common root causes and how to identify them

Important note about this article

This article only applies to the JSM Mail Handler functionality, which is configured in JSM projects at the project administration level, via the page Project Settings > Email Requests.

This article does not apply to the Jira Mail Handler functionality which is configured in ⚙ > System > Incoming Mail.

Environment

- Jira Service Management (JSM) Server/Data Center on any version from 4.0.0

- Incoming Emails are fetched and processed using the JSM Mail Handler configured at the project level via the page Project Settings > Email Requests

Explanation around how the JSM Mail Handler works

The JSM Mail Handler consists of 2 different jobs, which are scheduled to run every minute:

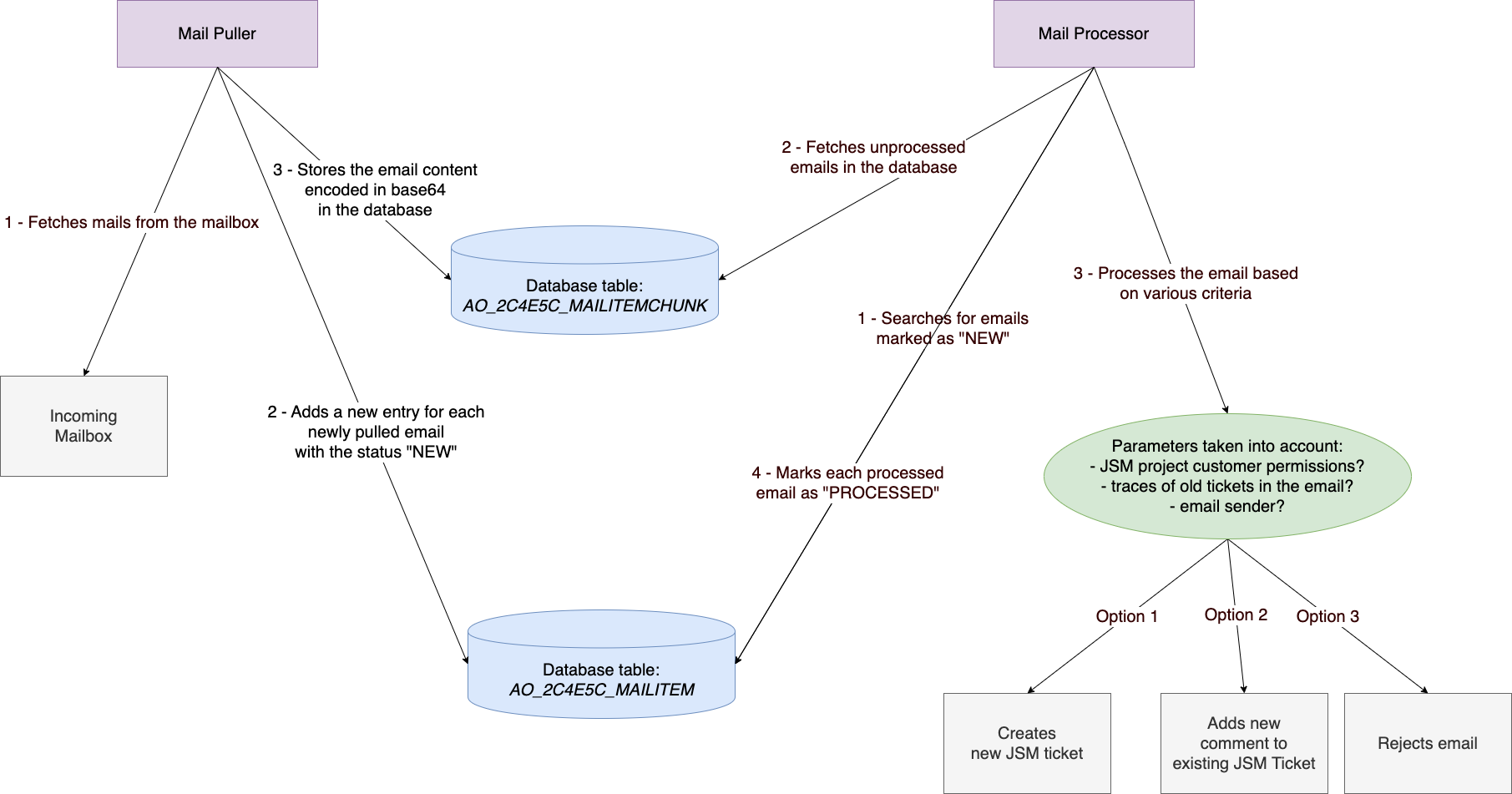

- The Mail Puller Job:

- The purpose of this job is to fetch email from the mailboxes configured in each JSM project (via the page Project Settings > Email Requests)

- It works as described below:

- It connects to each mailbox and looks for UNREAD emails that were received in the mailbox after a certain date (the date when the Mail Handler was configured in the JSM project)

- It downloads each email and encodes it in base64

- It marks each email that was fetched from the mailbox(es) as READ

- It stores each base64 encoded email in the Jira Database table AO_2C4E5C_MAILITEMCHUNK

- It adds a new entry to the table AO_2C4E5C_MAILITEM with the status NEW, whose ID refers to the ID of the email that was stored in the table AO_2C4E5C_MAILITEMCHUNK

- The Mail Processor Job:

- The purpose of this job is to process the encoded emails stored in the Jira Database and convert them into new JSM issues or comments (or to reject them if necessary)

- It works as described below:

- It searches for the IDs of new unprocessed emails in the table AO_2C4E5C_MAILITEM

- It fetches the base64 encoded email in the table AO_2C4E5C_MAILITEMCHUNK

- Based on various criteria, it either:

- Rejects the email

- Creates a new JSM request from the email

- Adds a new comment to an existing JSM request
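The two-job flow described above can be sketched as a small in-memory model. This is only an illustration of the hand-off between the two jobs, not the actual JSM implementation: the `JiraDb` class, the function names, the ID scheme, and the `PROCESSED` status are all hypothetical, and the date filter applied by the real Mail Puller is left out.

```python
import base64
from dataclasses import dataclass, field


@dataclass
class JiraDb:
    """In-memory stand-in for the two tables named in this article."""
    mail_item_chunk: dict = field(default_factory=dict)  # AO_2C4E5C_MAILITEMCHUNK
    mail_item: dict = field(default_factory=dict)        # AO_2C4E5C_MAILITEM


def run_mail_puller(mailbox: list, db: JiraDb) -> int:
    """Store every UNREAD message base64-encoded and mark it READ."""
    pulled = 0
    for message in mailbox:
        if not message["unread"]:
            continue  # READ messages are ignored by the puller
        encoded = base64.b64encode(message["raw"])
        message["unread"] = False
        item_id = len(db.mail_item) + 1  # hypothetical ID scheme
        db.mail_item_chunk[item_id] = encoded
        db.mail_item[item_id] = "NEW"
        pulled += 1
    return pulled


def run_mail_processor(db: JiraDb, decide) -> list:
    """Decode every NEW item and record what `decide` chose to do with it."""
    outcomes = []
    for item_id, status in list(db.mail_item.items()):
        if status != "NEW":
            continue
        raw = base64.b64decode(db.mail_item_chunk[item_id])
        outcomes.append((item_id, decide(raw)))
        db.mail_item[item_id] = "PROCESSED"  # hypothetical status name
    return outcomes
```

The point of the sketch is that the Mail Processor only ever sees what the Mail Puller has already written to the database, so a problem in either job stops the whole flow.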

The JSM Mail Handler flow is illustrated in the screenshot below:

It is important to note that:

- Both jobs are scheduled by the Jira Task Scheduler and are executed by Caesium threads (there are 4 Caesium threads available in Jira to run any scheduled job)

- There is only 1 Mail Puller job and 1 Mail Processor job, and both jobs are responsible for all the JSM Mail Handlers configured in all the JSM projects

- As a result, if either of these 2 jobs stops running or gets stuck, all the JSM Mail Handlers from all JSM projects will be impacted

Troubleshooting stuck JSM Mail Handler issues

As explained in the previous section, the Mail Puller job and the Mail Processor job are vital for the JSM Mail Handlers to work properly. Therefore, the most common scenarios why the JSM Mail Handlers stop working are the scenarios where:

- Either the Mail Puller job is stuck

- Or the Mail Processor job is stuck

There can be other scenarios where the JSM Mail Handlers will not work properly, for example:

- There is some network/connectivity issue between the Jira application and the mailbox(es)

- There is a clone/test Jira application configured to pull emails from the same mailbox(es) and competing with the Production Jira application to fetch the same emails

Troubleshooting issues where the JSM Mail Handler stops processing new incoming emails can be quite tricky, since there can be various root causes. Therefore, it is good to get yourself familiar with the various tools (logs, SQL queries...) that can help you figure out what is happening.

Let's dig into the tools that can help you troubleshoot such issues.

Checking if there is a clone/test/UAT Jira instance configured with the same Mail Handler

This is not a tool per se, but it is something that you will need to check first.

A very common scenario where the JSM Mail Handlers are not processing new incoming emails is the scenario where a clone of the Production Jira instance was set up in order to perform some testing. Since the clone version of the Jira application will contain the same JSM Mail Handlers, if this application is running at the same time as the production Jira, the following will happen:

- Both Jira applications will try to fetch the same emails around the same time

- If the Clone Jira application fetches the emails before the production Jira, the emails will be marked as READ in the mailbox(es) before the production Jira instance has a chance to get them

- As a result, the JSM Mail Handlers from the Production Jira application will ignore these emails since they were already marked as READ
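The competition between the two instances can be illustrated with a toy mailbox. The `pull_unread` function and the message structure are hypothetical and only model the UNREAD/READ behavior described above.

```python
def pull_unread(mailbox):
    """Return the UNREAD messages and mark them READ, as a puller would."""
    fetched = [message for message in mailbox if message["unread"]]
    for message in fetched:
        message["unread"] = False
    return fetched


mailbox = [{"subject": "Printer is broken", "unread": True}]

clone_fetched = pull_unread(mailbox)       # the clone happens to poll first
production_fetched = pull_unread(mailbox)  # production polls a moment later

print(len(clone_fetched), len(production_fetched))  # 1 0
```

Whichever instance polls first "wins" the email, which is why the symptom can look intermittent from the production side.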

Checking the Connectivity logs

Checking the connectivity logs of each JSM Mail Handler can be a good place to start, since these logs are available from the Jira UI and help identify any connection issue between the Jira application and the mail servers.

To access these logs:

- Go to ⚙ > Applications > Jira Service Management > Email Requests

- Click on View Logs next to any JSM Mail Handler

- Open the tab Connectivity logs

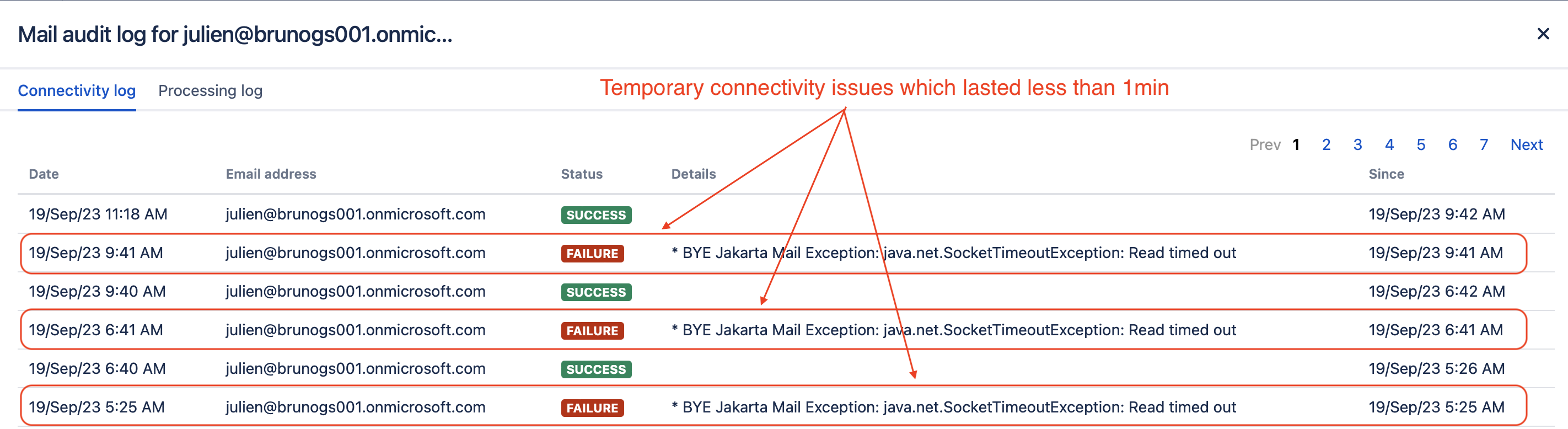

Good to know about these logs: it is common to see very short connectivity issues which last no longer than 1 minute. These issues are usually just temporary glitches (as illustrated in the screenshot below), and can be ignored. However, if you observe connectivity issues which lasted for a long period of time (for example >30min, > several hours), then you might need to focus on the network side of things.

Enabling the JSM Mail Handler DEBUG package

The JSM Mail Handler logs are not enabled by default, and are reset whenever the Jira application is restarted.

To enable the JSM Mail Handler debug package, you need to follow the steps below:

- Go to the page ⚙ > System > Logging and Profiling

- Click on Configure logging level for another package

Use the package below as the package name, and select DEBUG for the Logging Level

com.atlassian.mail.incoming

Once this package is enabled, you should start seeing new lines added every minute to the log file atlassian-jira-incoming-mail.log:

The log sample above shows an example of a "healthy" JSM Mail Handler situation. You can see that:

- the Mail Puller job was triggered as expected (Start running MailPuller ...) and completed (Finish running MailPuller ...)

- the Mail Processor job was triggered as expected (Start running MailProcessor ...) and completed (Finish running MailProcessor ...)

While analyzing these logs, if you see that either Job is never executed, then it's an indication that the job is either stuck, or taking a long time to complete, or that something is wrong with the Jira Task Scheduler and that it stopped scheduling this job.
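A quick way to apply this check to a large log file is to count the start and finish markers per job. The sketch below assumes only that the relevant lines contain the phrases quoted above ("Start running MailPuller", "Finish running MailProcessor", and so on); the exact line layout of atlassian-jira-incoming-mail.log is not assumed, and the function names are hypothetical.

```python
JOBS = ("MailPuller", "MailProcessor")


def count_job_markers(log_lines):
    """Count 'Start running <job>' and 'Finish running <job>' lines per job."""
    counts = {job: {"start": 0, "finish": 0} for job in JOBS}
    for line in log_lines:
        for job in JOBS:
            if f"Start running {job}" in line:
                counts[job]["start"] += 1
            elif f"Finish running {job}" in line:
                counts[job]["finish"] += 1
    return counts


def flag_suspicious_jobs(counts):
    """Report jobs that never ran, or that started more often than they finished."""
    findings = {}
    for job, seen in counts.items():
        if seen["start"] == 0:
            findings[job] = "never executed in this log window"
        elif seen["finish"] < seen["start"]:
            findings[job] = "started but did not finish"
    return findings
```

To use it, pass the lines of the log file, for example `flag_suspicious_jobs(count_job_markers(open(path, encoding="utf-8")))`. A job that is simply mid-run when the log is captured will also show one more start than finish, so a flagged job should be confirmed with the database and thread dump checks.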

Checking in the Jira Database if either JSM Mail job is stuck

One way to check if either job is stuck (the Mail Puller or the Mail Processor) is to query the rundetails table in the Jira Database, since it shows the last execution time of any Jira scheduled job and is a great indicator if a job is healthy or not.

SQL Query

The query below can be executed to check the running status of both JSM Mail Handler Jobs:

select * from rundetails where job_id in ('com.atlassian.jira.internal.mail.services.MailPullerJobRunner', 'com.atlassian.jira.internal.mail.services.MailProcessorJobRunner');

Analyzing the results

In a healthy environment you should see:

- only 1 row for each job, with "S" as the outcome

- the start time should be a fairly "recent time", meaning just a few minutes before the SQL query was executed

Below is an example of output showing that the 2 jobs are healthy:

|id |job_id |start_time |run_duration|run_outcome|info_message|
|-------|----------------------------------------------------------------|-----------------------------|------------|-----------|------------|
|743,068|com.atlassian.jira.internal.mail.services.MailProcessorJobRunner|2023-09-19 14:39:18.600 +0000|16 |S | |
|743,069|com.atlassian.jira.internal.mail.services.MailPullerJobRunner |2023-09-19 14:39:18.957 +0000|2,708 |S | |

Example of "unhealthy job" situation 1

If you see 2 rows for the same job with 1 row that has the run outcome "A" with the message "Already running", then it is an indication that the job might be stuck.

Below is an example showing that the Mail Processor job has been stuck (or running for a very long time) since 12:39, and that the last time Jira tried to schedule this job (at 14:39), it canceled its attempt to run the job since it was already running:

|id |job_id |start_time |run_duration|run_outcome|info_message |
|-------|----------------------------------------------------------------|-----------------------------|------------|-----------|-----------------------|
|743,068|com.atlassian.jira.internal.mail.services.MailProcessorJobRunner|2023-09-19 12:39:18.600 +0000|16 |S | |
|743,069|com.atlassian.jira.internal.mail.services.MailProcessorJobRunner|2023-09-19 14:39:18.957 +0000|2,708 |A |Already running |
|743,069|com.atlassian.jira.internal.mail.services.MailPullerJobRunner |2023-09-19 14:39:18.957 +0000|2,708 |S | |

Example of "unhealthy job" situation 2

Another unhealthy situation is the situation where one of the mail jobs is not stuck (it completed its execution successfully), but it has not been triggered by the Jira scheduler in a long time.

For this type of scenario, you should see 1 row for the mail job with the outcome "S", which seems "healthy" at first. However, if you check the "start time" you will see that the last time the job was executed was a long time ago (for example, hours ago), contrary to the other mail job which was recently executed.
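The healthy case and the two unhealthy situations described in this section can be expressed as a small classifier over the rows returned for one job. This is a sketch under assumptions: the function name is hypothetical, rows are passed as `(start_time, run_outcome)` tuples, and the 10-minute threshold is an arbitrary reading of "just a few minutes".

```python
from datetime import datetime, timedelta, timezone


def classify_job(rows, now, max_age=timedelta(minutes=10)):
    """Classify one job from its rundetails rows.

    rows: list of (start_time, run_outcome) tuples for a single job_id.
    """
    if not rows:
        return "no run recorded"
    if any(outcome == "A" for _, outcome in rows):
        return "possibly stuck"  # situation 1: an "Already running" row exists
    latest_start = max(start for start, _ in rows)
    if now - latest_start > max_age:
        return "not scheduled recently"  # situation 2: last run is too old
    return "healthy"


now = datetime(2023, 9, 19, 14, 41, tzinfo=timezone.utc)
processor_rows = [
    (datetime(2023, 9, 19, 12, 39, 18, tzinfo=timezone.utc), "S"),
    (datetime(2023, 9, 19, 14, 39, 18, tzinfo=timezone.utc), "A"),
]
print(classify_job(processor_rows, now))  # possibly stuck
```

The classifier must be run once per job_id, since the comparison that matters is between each job's own last run and the current time.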

Below is an example showing that the Mail Processor Job was scheduled for the last time 1 day ago (Sept 18th in the example below), while the Mail Puller job was recently scheduled (Sept 19th):

|id |job_id |start_time |run_duration|run_outcome|info_message|
|-------|----------------------------------------------------------------|-----------------------------|------------|-----------|------------|
|743,068|com.atlassian.jira.internal.mail.services.MailProcessorJobRunner|2023-09-18 14:39:18.600 +0000|16 |S | |
|743,069|com.atlassian.jira.internal.mail.services.MailPullerJobRunner |2023-09-19 14:39:18.957 +0000|2,708 |S | |

Collecting thread dumps

Collecting Thread Dumps while the problem is happening will be very helpful to identify the root cause, in the case where either the Mail Puller or Mail Processor job is stuck.

What you need to look for is the following:

- Look for a long-running Caesium thread, whose name should be one of the 4 names listed below:

- Caesium-1-1

- Caesium-1-2

- Caesium-1-3

- Caesium-1-4

- Check if the long running Caesium thread contains either method below in the stack trace:

Method that corresponds to the Mail Puller Job

com.atlassian.jira.internal.mail.processor.feature.puller.MailPullerService.run

Method that corresponds to the Mail Processor Job

com.atlassian.jira.internal.mail.processor.feature.processor.MailProcessorService.run

Based on the method the Caesium thread is stuck on, you might be able to identify which bug or root cause further down in this article is relevant.
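When a thread dump is large, the search described above can be scripted. The sketch below assumes the usual jstack-style layout, where each thread starts with a line beginning with its quoted name and the stack frames follow on separate lines; the function name is hypothetical, and the two method names are the ones listed above.

```python
import re

MAIL_JOB_FRAMES = {
    "com.atlassian.jira.internal.mail.processor.feature.puller.MailPullerService.run": "Mail Puller",
    "com.atlassian.jira.internal.mail.processor.feature.processor.MailProcessorService.run": "Mail Processor",
}

# A thread header line starts with the thread name in double quotes.
THREAD_HEADER = re.compile(r'^"(?P<name>[^"]+)"')


def find_mail_job_threads(thread_dump: str):
    """Return (thread name, job) for each Caesium thread running a mail job."""
    matches = []
    current = None
    for line in thread_dump.splitlines():
        header = THREAD_HEADER.match(line)
        if header:
            current = header.group("name")
            continue
        if current is None or not current.startswith("Caesium-"):
            continue
        for frame, job in MAIL_JOB_FRAMES.items():
            if frame in line and (current, job) not in matches:
                matches.append((current, job))
    return matches
```

A single dump only shows what the thread was doing at that instant. Running the function over several dumps taken a few minutes apart, and checking that the same thread is in the same job each time, is what indicates a long-running thread.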

Cause

Root Cause 1 - There is a clone/test Jira instance pulling emails from the same mailbox

Explanation

If there is a test Jira application which is a clone of the production Jira application and is up and running, this application will "compete" with the production JSM Mail Handler to fetch emails from the same mailboxes. If the test Jira instance fetches these emails before the production Jira instance has a chance to fetch them, the production Jira will miss these emails, since it will only fetch emails marked as UNREAD.

Diagnosis

To check if this root cause might be relevant:

- check if there is any clone Jira instance that is up and running on a test server

- check if the emails are marked as READ

- try to change the username and password of the mailbox used by the JSM Mail Handler, update it in the production Jira instance and verify if the emails are fetched and processed

Solution

Either stop the test Jira instance, or remove/disable the JSM Mail Handlers configured in this instance.

Root Cause 2 - There is a Jira Core Mail Handler configured in the same Jira application to process emails from the same mailbox

Explanation

There are 2 types of Mail Handlers that come with the JSM application:

- the Jira Mail Handler functionality which is configured in ⚙ > System > Incoming Mail

- This type of Mail Handler is designed to only work with Jira users with application access (users with a license), and is not intended to work with customers (users without a license, who only have access to the Customer Portal)

- the JSM Mail Handler functionality, which is configured in JSM projects at the project administration level, via the page Project Settings > Email Requests

- This type of Mail Handler is especially designed for customer users without a license, who only have access to the Customer Portal

If a Jira application is configured with both types of Mail Handlers configured to pull emails from the same mailbox, the following will happen whenever an email is sent to that mailbox from a customer user (user without a license or the right permission):

- The email will be rejected by the Jira Mail Handler, since the impacted users either don't have a license or don't have the right permission to access the project configured with the mail handler

- The JSM Mail Handler will miss the email, since the email has already been read by the Jira Mail Handler

- In the end, the email will not be processed at all

Note that for this root cause, the problem will be intermittent, as it depends on which type of Mail Handler accesses the mailbox first (it is random).

Diagnosis

To check if this root cause is relevant:

- Go to the page ⚙ > System > Incoming Mail

- Check if there is a Mail Handler configured to fetch emails from the same mailbox as the JSM Mail Handler configured in the page Project Settings > Email Requests

Solution

Remove the Jira Mail Handler that is fetching emails from the same mailbox as the JSM Mail Handler.

Root Cause 3 - The mail puller job cannot access the mail box due to network issue or authentication issue (basic auth, oauth 2.0...)

Explanation

For various reasons, the JSM Mail Handler might stop being able to connect to the Mailbox. For example:

- the Mail Server is down

- or the Mail Server is inaccessible from the Jira application (due to a Network/Proxy/Firewall issue)

- or the username/password used in the JSM Mail Handler configuration is no longer valid

- or in case Oauth 2.0 is used as an authentication method by the JSM, the Oauth 2.0 token or secret key might be invalid/expired

In such case, the JSM Mail Puller will fail to fetch new incoming emails.

Diagnosis

To check if this root cause might be relevant:

- Check if the new emails are marked as UNREAD

- If they are marked as UNREAD, it is an indication that the Mail Puller is unable to connect to the Mailbox to fetch emails

- Check the atlassian-jira-incoming-mail.log files to see if you can find any network/connection related error

If you find the error below, it is an indication that the Mail Puller failed to get an Oauth 2.0 token to connect to the Mail Box because the Secret Key has expired. In this case, the KB article The Jira (or JSM) Mail Handler stops processing new incoming emails due to the error "The provided client secret keys for app XXXXXXXX are expired" is relevant:

2024-08-19 22:54:16,570-0600 Caesium-1-1 WARN ServiceRunner [c.a.j.i.m.p.feature.oauth.MailOAuthServiceImpl] Recoverable exception fetching OAuth token
com.atlassian.oauth2.client.api.storage.token.exception.RecoverableTokenException: An error has occurred while refreshing OAuth token
...
Caused by: com.atlassian.oauth2.client.api.lib.token.TokenServiceException: AADSTS7000222: The provided client secret keys for app 'XXXXXXXX-XXXX-XXXX-XXXX-XXXXXXXX' are expired. Visit the Azure portal to create new keys for your app: https://aka.ms/NewClientSecret, or consider using certificate credentials for added security: https://aka.ms/certCreds. Trace ID: 09ddcb73-9a3b-4440-8d11-74862b588f00 Correlation ID: 7ed16942-749f-4fd7-aed7-2cb122e6d1e6 Timestamp: 2024-08-20 04:54:16Z
    at com.atlassian.oauth2.client.lib.token.DefaultTokenService.getToken(DefaultTokenService.java:150)
    at com.atlassian.oauth2.client.lib.token.DefaultTokenService.forceRefresh(DefaultTokenService.java:75)
    at com.atlassian.oauth2.client.storage.DefaultTokenHandler.refreshToken(DefaultTokenHandler.java:144)
    ... 46 more

- Go to ⚙ > Applications > Jira Service Management > Email Requests

- Click on View Logs next to any JSM Mail Handler

- Open the tab Connectivity logs

- Check if you can see frequent connection outages

Check if you get the error below which indicates that the Mail Handler failed to get a new Oauth 2.0 token (in case the Mail Handler is using the Oauth 2.0 authentication)

OAuth token not defined for connection. OAuth Authorisation required

Solution

It depends on the actual reason why Jira can't access the mail box:

- Engage your network/security team in case of network/connectivity issue between the Jira application and the Mail Server

- Make sure that the mail server is up and running

- In case the JSM Mail Handler is configured with the Basic Authentication (username/password)

- Make sure that the username/password combination is correct

- Try to reset the password on the Mail Server side, and update it in the JSM Mail Handler configuration in Project Settings > Email Requests

- In case the JSM Mail Handler is configured with the Oauth 2.0 authentication:

- If you saw the error in the incoming mail logs about the expired secret, check the KB article The Jira (or JSM) Mail Handler stops processing new incoming emails due to the error "The provided client secret keys for app XXXXXXXX are expired" as it explains how to fix this issue

- If it does not help or if you did not get the error about the expired token, try to re-authorize the JSM Mail Handler via Project Settings > Email Requests

Root Cause 4 - The mail puller job or the mail processor job is not scheduled anymore due to a temporary database connection outage

Explanation

If the Jira application experiences a temporary database connectivity issue (for example, during a DB server maintenance or re-start), some scheduled jobs such as the Mail Puller or Mail Processor job might never recover from this, and the Jira task scheduler will never try to execute them.

This behavior is caused by the bug JRASERVER-62072 - Database connectivity issue causes scheduled jobs to break

This bug was fixed in JSM 5.11.0.

Diagnosis

To check if this root cause might be relevant:

Run the SQL below and check if either job has not been scheduled in a long time (refer to the section Example of "unhealthy job" situation 2 for more information on how to identify it):

select * from rundetails where job_id in ('com.atlassian.jira.internal.mail.services.MailPullerJobRunner', 'com.atlassian.jira.internal.mail.services.MailProcessorJobRunner');

Check the Jira application logs and look for an error similar to the one below, which indicates that Jira failed to execute either the Mail Puller or Mail Processor job due to a temporary DB connectivity issue:

2020-12-05 15:07:52,615-0600 Caesium-1-1 ERROR ServiceRunner [c.a.s.caesium.impl.SchedulerQueueWorker] Unhandled exception thrown by job QueuedJob[jobId=com.atlassian.jira.internal.mail.services.MailProcessorJobRunner,deadline=1607202432603]
java.lang.reflect.InvocationTargetException
...
Caused by: com.atlassian.jira.exception.DataAccessException: org.ofbiz.core.entity.GenericDataSourceException: Unable to establish a connection with the database. (The connection attempt failed.)
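To find this kind of error in a large Jira application log, the job ID can be extracted from each "Unhandled exception thrown by job" line. The sketch below relies only on the line format shown in the sample above; the function name is hypothetical.

```python
import re

# Matches the scheduler error shown above and captures the job ID.
FAILED_JOB = re.compile(
    r"Unhandled exception thrown by job QueuedJob\[jobId=(?P<job_id>[^,\]]+)"
)

MAIL_JOB_IDS = {
    "com.atlassian.jira.internal.mail.services.MailPullerJobRunner",
    "com.atlassian.jira.internal.mail.services.MailProcessorJobRunner",
}


def failed_mail_jobs(log_lines):
    """Return the mail job IDs that hit an unhandled scheduler exception."""
    found = set()
    for line in log_lines:
        match = FAILED_JOB.search(line)
        if match and match.group("job_id") in MAIL_JOB_IDS:
            found.add(match.group("job_id"))
    return sorted(found)
```

A match only shows that the job failed at that moment. Whether it never recovered still has to be confirmed with the rundetails query.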

Solution

- Short Term fix

- Re-start all the Jira nodes to reset both the Mail Puller and Mail Processor jobs

- Long Term Fix

- Upgrade JSM to 5.11.0 or any higher version

Root Cause 5 - The mail puller job fails to fetch any new emails due to one email which has a subject which exceeds 255 bytes

Explanation

Incoming emails with subjects exceeding 255 bytes will cause the Mail Puller to fail whenever it tries to pull emails from the mailbox, and the Mail Handler will stop processing further incoming mails.
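Note that the limit is expressed in bytes, not characters, so a subject with fewer than 255 characters can still exceed it when it contains non-ASCII characters. A minimal sketch of the check, assuming the subject is measured in UTF-8 (the actual byte count depends on the database character set), with hypothetical function names:

```python
MAX_SUBJECT_BYTES = 255  # limit named in this root cause


def subject_byte_length(subject: str, encoding: str = "utf-8") -> int:
    """Return the size of the subject in bytes for the given encoding."""
    return len(subject.encode(encoding))


def exceeds_subject_limit(subject: str, encoding: str = "utf-8") -> bool:
    """True if the encoded subject is larger than the 255-byte limit."""
    return subject_byte_length(subject, encoding) > MAX_SUBJECT_BYTES


accented = "é" * 128  # 128 characters, 2 bytes each in UTF-8
print(len(accented), subject_byte_length(accented))  # 128 256
print(exceeds_subject_limit(accented))               # True
```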

This behavior is tracked in the bug ticket JSDSERVER-7004 - Issue creation via email fails if email subject exceeds 255 bytes causing Mail handler to stop processing

The bug was fixed in the JSM versions 5.9.2, 5.11.0, 5.4.10.

Diagnosis

To check if this root cause might be relevant, look into the atlassian-jira-incoming-mail.log files to see if you can spot an error similar to the one below:

2020-09-07 07:44:30,254+0300 ERROR [] Caesium-1-3 ServiceRunner Exception when MailPullerWorker pulls emails:
com.querydsl.core.QueryException: Caught SQLException for insert into "AO_2C4E5C_MAILITEM" ("MAIL_CONNECTION_ID", "STATUS", "CREATED_TIMESTAMP", "UPDATED_TIMESTAMP", "FROM_ADDRESS", "SUBJECT") values (?, ?, ?, ?, ?, ?)
    at com.querydsl.sql.DefaultSQLExceptionTranslator.translate(DefaultSQLExceptionTranslator.java:50) [querydsl-4.1.4-provider-plugin-1.0.jar:?]
...
Caused by: java.sql.SQLException: ORA-12899: value too large for column "JIRA"."AO_2C4E5C_MAILITEM"."SUBJECT" (actual: 274, maximum: 255)

Solution

- Short Term fix

- Refer to the workarounds provided in the bug ticket

- Long Term Fix

- Upgrade JSM to one of the fixed versions (5.9.2, 5.11.0, 5.4.10)

Root Cause 6 - The mail puller job got stuck due to an infinite timeout used to fetch Oauth 2.0 tokens

Explanation

If a JSM Mail Handler is configured (in Project Settings > Email Requests) using Oauth 2.0 as the authorization method, the Mail Puller will connect to the mail server using an Oauth 2.0 token. At some point, this token will expire and the Mail Puller job will need to fetch a new Oauth 2.0 token from the Mail Server.

Due to a bug, the Mail Puller job might hang indefinitely while requesting a new Oauth 2.0 token.

The bug is tracked in the ticket JSDSERVER-12620 - The JSM Mail Handlers (channels) stop pulling new emails due to an infinite timeout used to fetch Oauth 2.0 tokens. A fix is available in JSM 10.0.0, 5.12.12, 5.4.25, 5.17.2 and later

The reason behind this bug is the fact that the JSM Mail Puller uses a function from an external library HTTPRequest.java to fetch a new Oauth 2.0 Access token after it expires. By default, this function is not using any timeout value, which means that the timeout will be infinite (the request will never time out if no response is sent back).
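The effect of a missing timeout can be reproduced with a generic socket example. This is not the HTTPRequest.java API used by JSM; it is only a Python illustration of the same principle, with hypothetical function names: a client waiting on a peer that never answers returns only if a timeout is set.

```python
import socket


def read_reply(host, port, timeout_seconds):
    """Connect, wait for a reply, and return None if none arrives in time.

    Passing timeout_seconds=None would wait forever, which mirrors the
    behavior described above.
    """
    with socket.create_connection((host, port), timeout=timeout_seconds) as conn:
        try:
            return conn.recv(1024)
        except socket.timeout:
            return None


def demo_silent_server(timeout_seconds=0.2):
    """Talk to a local listener that never sends anything back."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as server:
        server.bind(("127.0.0.1", 0))  # port 0: let the OS pick a free port
        server.listen(1)
        host, port = server.getsockname()
        return read_reply(host, port, timeout_seconds)
```

With a finite timeout, `demo_silent_server()` gives up and returns `None`. Without one, the call would block for as long as the connection stays open, which is how a single unanswered token request can hold a Caesium thread indefinitely.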

Diagnosis

To check if this root cause might be relevant:

Collect thread dumps while the issue is happening, and check if you see a long-running Caesium thread with the following stack trace (take note of the com.atlassian.oauth2.client.lib.token.DefaultTokenService.getToken method):

"Caesium-1-1" daemon prio=5 tid=0x00000000000000eb nid=0 runnable
   java.lang.Thread.State: RUNNABLE
    at java.base@11.0.13/java.net.SocketInputStream.socketRead0(Native Method)
    at java.base@11.0.13/java.net.SocketInputStream.socketRead(Unknown Source)
    at java.base@11.0.13/java.net.SocketInputStream.read(Unknown Source)
    ...
    at com.nimbusds.oauth2.sdk.http.HTTPRequest.send(HTTPRequest.java:899)
    at com.atlassian.oauth2.client.lib.token.DefaultTokenService.getToken(DefaultTokenService.java:139)
    at com.atlassian.oauth2.client.lib.token.DefaultTokenService.forceRefresh(DefaultTokenService.java:71)
    at com.atlassian.oauth2.client.storage.DefaultTokenHandler.refreshToken(DefaultTokenHandler.java:144)
    at com.atlassian.oauth2.client.storage.DefaultTokenHandler.refreshTokenIfNeeded(DefaultTokenHandler.java:131)
    ...
    at com.atlassian.jira.internal.mail.processor.feature.oauth.MailOAuthServiceImpl.getOAuthToken(MailOAuthServiceImpl.java:53)
    at com.atlassian.jira.internal.mail.processor.feature.authentication.OAuthAuthenticationStrategy.getPassword(OAuthAuthenticationStrategy.java:31)
    at com.atlassian.jira.internal.mail.processor.feature.puller.MailPullerWorker.getMailServerPassword(MailPullerWorker.java:248)

Run the SQL query below and check if you see 2 rows associated to the Mail Puller job, one with the "S" outcome, and one with the "A" outcome (refer to the section Example of "unhealthy job" situation 1 for more information on how to identify it):

select * from rundetails where job_id in ('com.atlassian.jira.internal.mail.services.MailPullerJobRunner', 'com.atlassian.jira.internal.mail.services.MailProcessorJobRunner');

Solution

- Short Term fix

- Re-start all the Jira nodes to reset the Mail Puller job

- Long Term Fix

- Upgrade JSM to one of the fixed versions listed in the bug ticket JSDSERVER-12620

Root Cause 7 - The mail processor job got stuck while performing a regex pattern matching operation

Explanation

Whenever the Mail Processor processes a new email, it checks if this email relates to an existing JSM request as explained in the Issue/comment creation of the Jira Mail Handler documentation. If the Mail Processor detects that this email relates to an existing JSM issue, it will try to remove the old threads in the email and extract the new message, and then add it as a comment to the JSM ticket. To remove the old threads, the Mail Processor needs to perform some regex matching operation based on various patterns.

We found that, in some rare cases, this process of performing the regex pattern matching might put the Mail Processor job in a stuck state.

This behavior is tracked in the bug ticket JSDSERVER-13967 - Jira Service Management Mail Process can get stuck processing the same email. A fix is available in JSM 5.13.0, 4.20.30, 5.4.15, 5.12.2 and later.

Diagnosis

To check if this root cause might be relevant:

Collect thread dumps while the issue is happening, and check if you see a long-running Caesium thread with the following stack trace (take note of the java.util.regex.Pattern class):

"Caesium-1-3" daemon prio=5 tid=0x00000000000002d0 nid=0 runnable
   java.lang.Thread.State: RUNNABLE
    at java.util.regex.Pattern$CharProperty.match(Pattern.java:3790)
    at java.util.regex.Pattern$Curly.match0(Pattern.java:4274)
    at java.util.regex.Pattern$Curly.match(Pattern.java:4248)
    at java.util.regex.Pattern$GroupHead.match(Pattern.java:4672)
    at java.util.regex.Pattern$Loop.matchInit(Pattern.java:4818)
    at java.util.regex.Pattern$Prolog.match(Pattern.java:4755)
    at java.util.regex.Pattern$Curly.match0(Pattern.java:4286)
    at java.util.regex.Pattern$Curly.match(Pattern.java:4248)
    at java.util.regex.Pattern$Curly.match0(Pattern.java:4286)
    at java.util.regex.Pattern$Curly.match(Pattern.java:4248)
    at java.util.regex.Pattern$Curly.match0(Pattern.java:4293)
    at java.util.regex.Pattern$Curly.match(Pattern.java:4248)
    at java.util.regex.Pattern$Begin.match(Pattern.java:3539)
    at java.util.regex.Matcher.search(Matcher.java:1248)
    at java.util.regex.Matcher.find(Matcher.java:637)
    at com.atlassian.pocketknife.internal.emailreply.matcher.basic.YahooSmbWroteOnDateBlockMatcher.isQuotedEmail(YahooSmbWroteOnDateBlockMatcher.java:25)
    at com.atlassian.pocketknife.internal.emailreply.matcher.basic.DelegatingQuotedEmailMatcher.isQuotedEmail(DelegatingQuotedEmailMatcher.java:15)
    ...
    at com.atlassian.pocketknife.internal.querydsl.DatabaseAccessorImpl.runInNewTransaction(DatabaseAccessorImpl.java:38)
    at com.atlassian.jira.internal.mail.processor.feature.processor.MailProcessorWorker.process(MailProcessorWorker.java:93)
    at com.atlassian.jira.internal.mail.processor.feature.processor.MailProcessorWorker.processAllValidMailChannels(MailProcessorWorker.java:143)
    at com.atlassian.jira.internal.mail.processor.feature.processor.MailProcessorService.run(MailProcessorService.java:25)
    at com.atlassian.jira.internal.mail.processor.services.MailProcessorExecutor.run(MailProcessorExecutor.java:29)
    at com.atlassian.jira.internal.mail.processor.services.AbstractMailExecutor.execute(AbstractMailExecutor.java:45)
    at com.atlassian.jira.internal.mail.processor.services.MailJobRunner.runJob(MailJobRunner.java:35)
    ...

Run the SQL query below and check if you see 2 rows associated to the Mail Processor job, one with the "S" outcome, and one with the "A" outcome (refer to the section Example of "unhealthy job" situation 1 for more information on how to identify it):

select * from rundetails where job_id in ('com.atlassian.jira.internal.mail.services.MailPullerJobRunner', 'com.atlassian.jira.internal.mail.services.MailProcessorJobRunner');

Solution

- Short Term fix

- Follow the steps described in the workaround section of the bug ticket JSDSERVER-13967

- Long Term Fix

- Upgrade JSM to one of the fixed versions listed in the bug ticket JSDSERVER-13967

Providing data to Atlassian Support

If you were not able to identify what is preventing the JSM Mail Handler from processing new incoming mails, please reach out to Atlassian Support via this link.

To help the Atlassian support team investigate the issue faster, you can follow the steps below and attach all the collected data to the support ticket raised with Atlassian:

- Enable the DEBUG package related to the JSM Mail Handler by following the steps below:

- Go to the page ⚙ > System > Logging and Profiling

- Click on Configure logging level for another package

- Use com.atlassian.mail.incoming as the package name, and select DEBUG for the "Logging Level"

- Wait for about 30 min, so that we can collect enough logs

- Generate a support zip, making sure to tick the option Thread Dumps when you generate it

- To include the thread dumps when creating the support zip, go to ⚙ > System > Troubleshooting and support tools > Create Support zip > Customize Zip, tick the Thread dumps option, and click on the Save button

- If you are using Jira Data Center, please generate a support zip from each Jira node

- Run the following SQL Query against the Jira Database:

select * from rundetails where job_id in ('com.atlassian.jira.internal.mail.services.MailPullerJobRunner', 'com.atlassian.jira.internal.mail.services.MailProcessorJobRunner');

- Take screenshots showing how the JSM Mail Handlers are configured in each JSM project (from the page Project Settings > Email Requests)

- Attach to the support ticket:

- The support zip (or the support zips from all the nodes, in case of a cluster of nodes)

- The result from the SQL query

- The screenshots