Bamboo Server fails to Initialize Plans on Server Restart

Symptoms

2011-10-12 11:16:16,966 FATAL [main] [BambooContainer] Cannot start bamboo

java.lang.ArrayIndexOutOfBoundsException: -1

at java.util.ArrayList.set(ArrayList.java:339)

at net.sf.hibernate.collection.List.readFrom(List.java:312)

at net.sf.hibernate.loader.Loader.readCollectionElement(Loader.java:384)

at net.sf.hibernate.loader.Loader.getRowFromResultSet(Loader.java:240)

......

......

at $Proxy11.getAllPlans(Unknown Source)

at com.atlassian.bamboo.container.BambooContainer.initialisePlans(BambooContainer.java:332)

at com.atlassian.bamboo.container.BambooContainer.start(BambooContainer.java:243)

OR

2013-01-04 14:28:04,855 FATAL [main] [BambooContainer] Cannot start bamboo

com.google.common.util.concurrent.UncheckedExecutionException: java.lang.NullPointerException

at com.google.common.cache.CustomConcurrentHashMap$ComputedUncheckedException.get(CustomConcurrentHashMap.java:3305)

at com.google.common.cache.CustomConcurrentHashMap$ComputingValueReference.compute(CustomConcurrentHashMap.java:3441)

at com.google.common.cache.CustomConcurrentHashMap$Segment.compute(CustomConcurrentHashMap.java:2322)

at com.google.common.cache.CustomConcurrentHashMap$Segment.getOrCompute(CustomConcurrentHashMap.java:2291)

at com.google.common.cache.CustomConcurrentHashMap.getOrCompute(CustomConcurrentHashMap.java:3802)

......

Caused by: java.lang.NullPointerException

at com.atlassian.bamboo.deletion.NotDeletedPredicate.apply(NotDeletedPredicate.java:27)

at com.atlassian.bamboo.deletion.NotDeletedPredicate.apply(NotDeletedPredicate.java:8)

at com.google.common.collect.Iterators$7.computeNext(Iterators.java:645)

at com.google.common.collect.AbstractIterator.tryToComputeNext(AbstractIterator.java:141)

at com.google.common.collect.AbstractIterator.hasNext(AbstractIterator.java:136)Cause

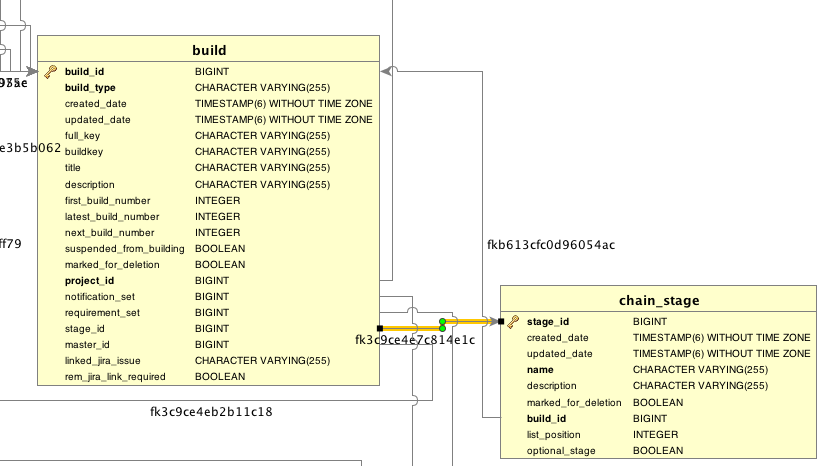

This is caused due to corruption in the Bamboo database, the LIST_POSITION column of CHAIN_STAGE table which stores the list order of Bamboo jobs is corrupted and has a value of -1. Another possible corruption of LIST_POSITION column is when there are duplicate LIST_POSITION values for the same BUILD_ID value in CHAIN_STAGE table.

Resolution

1. To check if you're affected by this bug execute the following command:

select BUILD_ID from CHAIN_STAGE where LIST_POSITION= -1;If the output returns any results, execute the following SQL;

select * from CHAIN_STAGE where BUILD_ID=<VALUE>;

STAGE_ID CREATED_D UPDATED_D NAME DESCRIPTION BUILD_ID LIST_POSITION MARKED_FOR_DELETION OPTIONAL_STAGE

---------- --------- --------- -------------------- ------------------------------------------------------- ---------- ------------- ------------------- --------------

71401503 Smoke Test JOB_2 58097680 -1 0 0

61538457 Build JOB_0 58097680 0 0 0

71401504 Release JOB_1 58097680 1 0 0

In the example above JOB_2 ha sa LIST_POSITION of -1, instead of 2.

2. Another example of this corruption is when there are duplicate LIST_POSITIONS for the same BUILD_ID. For instance, this listing looks fine:

because for the same BUILD_ID there are no duplicate LIST_POSITION values. If there were LIST_POSITION 3 and another 3 instead of 2 and 3 for the same BUILD_ID 7176214, then that would be a corruption. The easiest way to find out this type of corruptions is to enable detailed SQL logging, reproduce the problem, and see where exactly Bamboo fails. Based on the queries that Bamboo tries to run right before failing, we can identify the problematic plan. Next, we can check the related details in the CHAIN_STAGE table.

3. The final step is to find the problematic List Position for your build and execute the SQL below:

update CHAIN_STAGE set LIST_POSITION = <VALUE> where STAGE_ID = <VALUE>;