Improving instance stability with rate limiting

When automated integrations or scripts send requests to Confluence in huge bursts, it can affect Confluence’s stability, leading to drops in performance or even downtime. With rate limiting, you can control how many external REST API requests automations and users can make, and how often they can make them, so that your Confluence instance remains stable.

Rate limiting is available for Confluence Data Center.

How rate limiting works

Here are some details about how rate limiting works in Confluence.

How to turn on rate limiting

You need the System Administrator global permission to turn on rate limiting.

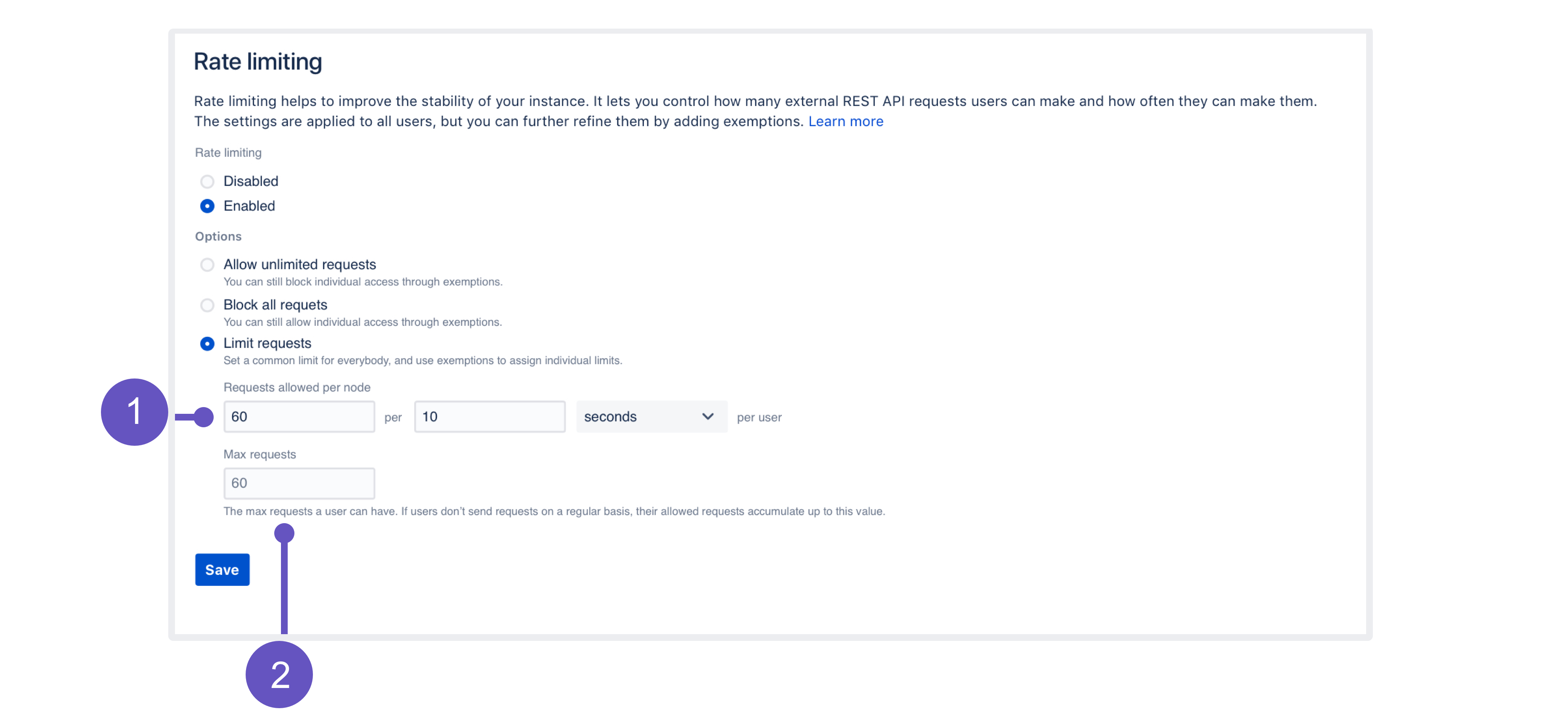

To turn on rate limiting:

- In Confluence, go to Administration > General Configuration > Rate limiting.

- Change the status to Enabled.

- Select one of the options: Allow unlimited requests, Block all requests, or Limit requests. The first and second are all about allowlisting and blocklisting. For the last option, you’ll need to enter actual limits. You can read more about them below.

- Save your changes.

Make sure to add exemptions for users who really need those extra requests, especially if you’ve chosen allowlisting or blocklisting. See Adding exemptions.

Limiting requests — what it’s all about

As much as allowlisting and blocklisting shouldn’t require additional explanation, you’ll probably be using the Limit requests option quite often, either as a global setting or in exemptions.

Let’s have a closer look at this option and how it works:

- Requests allowed: Every user is allowed a certain number of requests in a chosen time interval. It can be 10 requests every second, 100 requests every hour, or any other configuration you choose.

- Max requests (advanced): Allowed requests, if not sent frequently, can be accumulated up to a set maximum per user. This option allows users to make requests at a different frequency than their usual rate (for example, 20 every 2 minutes instead of 10 every 1 minute, as specified in their rate), or accumulate more requests over time and send them in a single burst, if that’s what they need. Too advanced? Just make it equal to Requests allowed, and forget about this field — nothing more will be accumulated.
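The interplay between Requests allowed and Max requests can be easier to see in code. The following is a minimal sketch of a token bucket with batch refills, not Confluence’s actual implementation. The class name `TokenBucket`, its parameters, and the assumption that a bucket starts full are all hypothetical and chosen for illustration only.

```python
class TokenBucket:
    """Illustrative per-user bucket; a sketch, not Confluence's actual implementation."""

    def __init__(self, requests_allowed, interval_seconds, max_requests, now=0.0):
        self.requests_allowed = requests_allowed  # tokens added per interval
        self.interval_seconds = interval_seconds  # length of one interval
        self.max_requests = max_requests          # cap on accumulated tokens
        self.tokens = max_requests                # assumption: the bucket starts full
        self.last_refill = now

    def _refill(self, now):
        # Add one batch of tokens for every full interval that has passed.
        intervals = int((now - self.last_refill) // self.interval_seconds)
        if intervals > 0:
            self.tokens = min(self.max_requests,
                              self.tokens + intervals * self.requests_allowed)
            self.last_refill += intervals * self.interval_seconds

    def try_request(self, now):
        """Spend one token if available; False stands in for an HTTP 429."""
        self._refill(now)
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


# 10 requests per 60 seconds, accumulating up to 20.
bucket = TokenBucket(requests_allowed=10, interval_seconds=60, max_requests=20)
burst = [bucket.try_request(now=0) for _ in range(21)]
print(burst.count(True))  # 20 allowed, the 21st is rejected
```

Setting `max_requests` equal to `requests_allowed` in this sketch means nothing beyond one interval’s worth of requests is ever accumulated, which mirrors the simple configuration described above.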

Examples

Finding the right limit

Adding exemptions

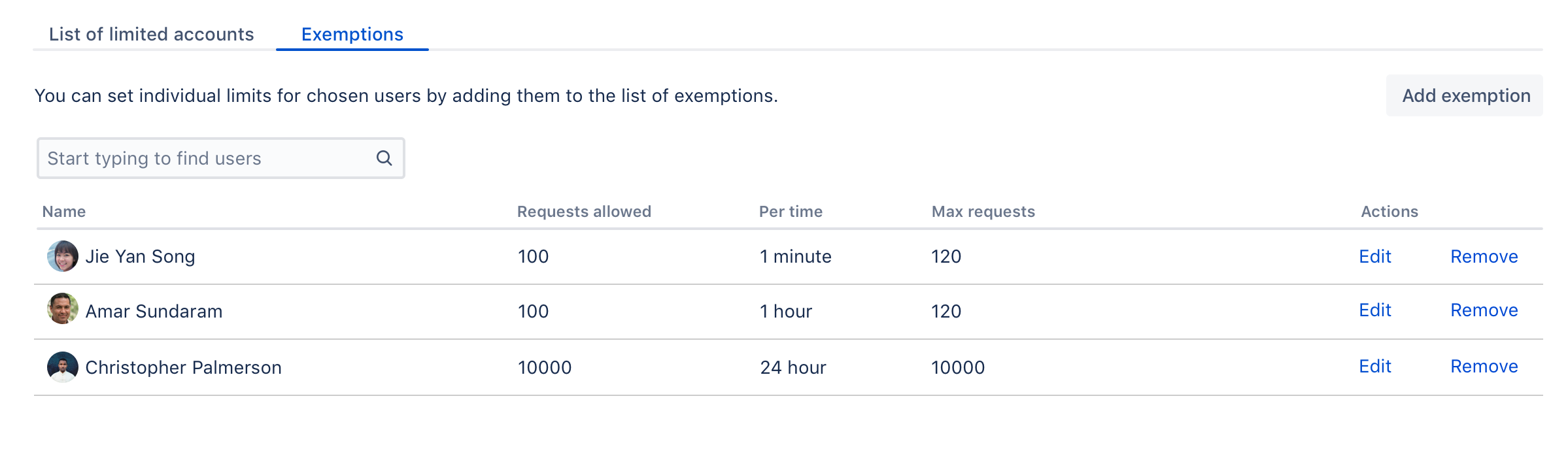

Exemptions are, well, special limits for users who really need to make more requests than others. Any exemptions you choose will take precedence over global settings.

After adding or editing an exemption, you’ll see the changes right away, but it takes up to 1 minute to apply the new settings to a user.

To add an exemption:

- Go to the Exemptions tab.

- Select Add exemption.

- Find the user and choose their new settings.

You can’t choose groups, but you can select multiple users.

- The options available here are just the same as in global settings: Allow unlimited requests, Block all requests, or Assign custom limit.

- Save your changes.

If you want to edit an exemption later, just click Edit next to a user’s name in the Exemptions tab.

Recommended: Add an exemption for anonymous access

Confluence sees all anonymous traffic as made by one user: Anonymous. If your site is public, and your rate limits are not too high, a single person may drain the limit assigned to anonymous. It’s a good idea to add an exemption for this account with a higher limit, and then observe whether you need to increase it further.

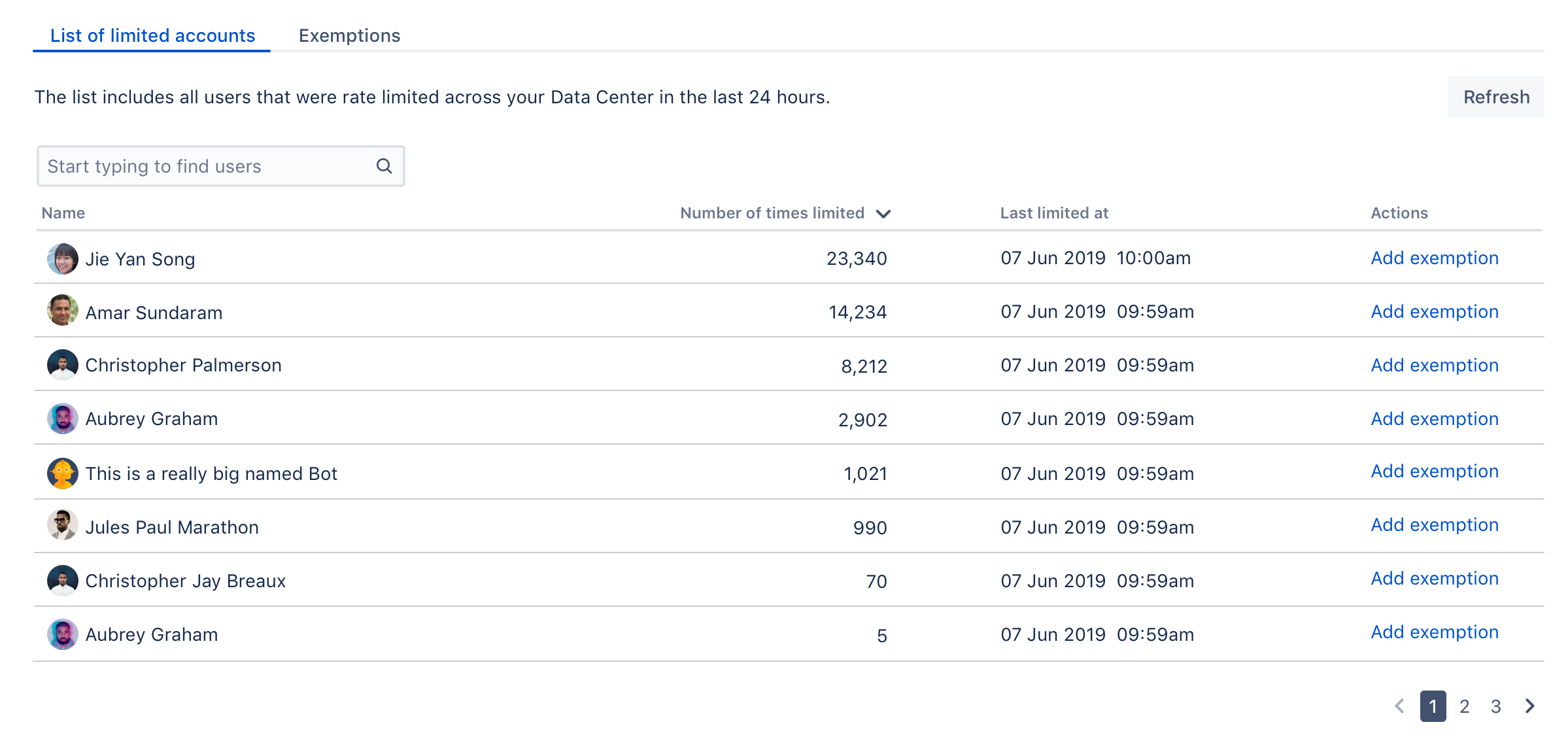

Identifying users who have been rate limited

When a user is rate-limited, they’ll know immediately as they’ll receive an HTTP 429 error message (too many requests). You can identify users that have been rate-limited by opening the List of limited accounts tab on the rate limiting settings page. The list shows all users from the whole cluster.

When a user is rate-limited, it can take up to 5 minutes for them to appear in the table.

Unusual accounts

You’ll recognize the users shown on the list by their name. It might happen, though, that the list will show some unusual accounts, so here’s what they mean:

- Unknown: That’s a user that has been deleted in Confluence. They shouldn’t appear on the list for more than 24 hours (as they can’t be rate limited anymore), but you might see them in the list of exemptions. Just delete any settings for them, because they don’t need rate limiting anymore.

- Anonymous: This entry gathers all requests that weren’t made from an authenticated account. Since one user can easily use the limit for anonymous access, it might be a good idea to add an exemption for anonymous traffic and give it a higher limit.

Viewing limited requests in the Confluence log file

You can also view information about rate-limited users and requests in the Confluence log file. This is useful if you want to get more details about the URLs that requests targeted or originated from.

When a request has been rate-limited you’ll see a log entry similar to this one:

2019-12-24 10:18:23,265 WARN [http-nio-8090-exec-7] [ratelimiting.internal.filter.RateLimitFilter] lambda$userHasBeenRateLimited$0 User [2c9d88986ee7cdaa016ee7d40bd20002] has been rate limited
-- url: /rest/api/space/DS/content | traceId: 30c0edcb94620c83 | userName: exampleuser

Getting rate limited — user’s perspective

When users make authenticated requests, they’ll see rate limiting headers in the response. These headers are added to every response, not just when you’re rate-limited.

| Header | Description |
|---|---|
| X-RateLimit-Limit | The max number of requests (tokens) you can have. New tokens won’t be added to your bucket after reaching this limit. Your admin configures this as Max requests. |
| X-RateLimit-Remaining | The remaining number of tokens. This value is as accurate as it can be at the time of making a request, but it might not always be correct. |
| X-RateLimit-Interval-Seconds | The time interval in seconds. You get a batch of new tokens every time interval. |
| X-RateLimit-FillRate | The number of tokens you get every time interval. Your admin configures this as Requests allowed. |
| retry-after | How long you need to wait until you get new tokens. If you still have tokens left, it shows 0; this means you can make more requests right away. |

When you’re rate-limited and your request doesn’t go through, you’ll see the HTTP 429 error message (too many requests). You can use these headers to adjust scripts and automation to your limits, making them send requests at a reasonable frequency.
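As a rough illustration of how a script might read these headers, here is a minimal sketch. The helper names `seconds_to_wait` and `steady_delay` are hypothetical, and the sketch assumes that retry-after carries a plain number of seconds, which the header table does not state explicitly.

```python
def _normalize(headers):
    # HTTP header names are case-insensitive, so compare them in lowercase.
    return {name.lower(): value for name, value in headers.items()}


def seconds_to_wait(status_code, headers):
    """Return how long to pause before sending the next request.

    Assumption: retry-after is a number of seconds.
    """
    h = _normalize(headers)
    retry_after = float(h.get("retry-after", 0))
    remaining = int(h.get("x-ratelimit-remaining", 1))
    if status_code == 429 or remaining <= 0:
        return max(retry_after, 0.0)
    return 0.0  # tokens are left, so the next request can go out right away


def steady_delay(headers):
    """Spacing between requests that matches the refill rate on average."""
    h = _normalize(headers)
    interval = float(h["x-ratelimit-interval-seconds"])
    fill_rate = float(h["x-ratelimit-fillrate"])
    return interval / fill_rate
```

A script could call `seconds_to_wait` after each response and sleep for the returned time, or use `steady_delay` to space requests evenly so the bucket never runs empty.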

Other tasks

Allowlisting URLs and resources

We’ve also added a way to allowlist whole URLs and resources on your Confluence instance using a system property. Use this as a quick fix for something that gets rate-limited but shouldn’t be.

To allow specific URLs to be excluded from rate limiting:

- Stop Confluence.

- Add the com.atlassian.ratelimiting.whitelisted-url-patterns system property, and set the value to a comma-separated list of URLs, for example:

-Dcom.atlassian.ratelimiting.whitelisted-url-patterns=/**/rest/applinks/**,/**/rest/capabilities,/**/rest/someapi

The way you add system properties depends on how you run Confluence. See Configuring System Properties for more information.

- Restart Confluence.

For more info on how to create URL patterns, see AntPathMatcher: URL patterns.

Allowlisting external applications

You can also allowlist consumer keys, which lets you remove rate limits for external applications integrated through AppLinks.

If you're integrating Confluence with other Atlassian products, you don't have to allowlist them as this traffic isn't limited.

- Find the consumer key of your application.

  - Go to Administration > General Configuration > Application Links.

  - Find your application, and select Edit.

  - Copy the Consumer Key from Incoming Authentication.

- Allowlist the consumer key.

  - Stop Confluence.

  - Add the com.atlassian.ratelimiting.whitelisted-oauth-consumers system property, and set the value to a comma-separated list of consumer keys, for example:

-Dcom.atlassian.ratelimiting.whitelisted-oauth-consumers=app-connector-for-confluence-server

The way you add system properties depends on how you run Confluence. See Configuring System Properties for more information.

  - Restart Confluence.

After entering the consumer key, the traffic coming from the related application will no longer be limited.

Adjusting your code for rate limiting

We’ve created a set of strategies you can apply in your code (scripts, integrations, apps) so it works with rate limits, whatever they are.

For more info, see Adjusting your code for rate limiting.