Interpreting infrastructure metrics for in-product diagnostics

Summary

Infrastructure metrics for JMX monitoring and in-product diagnostics are used in Jira Software Data Center and Jira Service Management Data Center. These metrics help you monitor the health and performance of your instance infrastructure:

- outgoing mail server connection

- incoming mail server connection

- external user directories connectivity

- shared home write latency

- local home write latency

- internode latency

Learn more about other Jira-specific metrics for in-product diagnostics

Solution

Outgoing mail server connection state

The connection state metric for the outgoing mail server (SMTP) is created once it’s configured and removed when it’s deleted.

mail.outgoing.connection.state

mail.outgoing.connection.state.custom attempts to connect to the mail server and pings it with the NOOP or RSET SMTP command. This operation is performed once a minute. The metric reports a failed state when a connection can’t be established or the response to a command is invalid. During the measurement, the connection to the mail server times out after 10 seconds, and the metric reports the disconnected value.

Available custom metric values: connected (true or false), totalFailures (the sum of the false values since the restart).

| Warning markers | Signs of healthiness |
|---|---|
| For connected, each false value indicates that Jira couldn't establish a connection with the mail server or didn’t get the expected response when pinging the server. For totalFailures, the constantly growing number of totalFailures means that this is most likely a permanent issue that needs your attention. Example: connected: false totalFailures: 23 | For connected, the true value indicates that a connection could be established and the server response for the ping was OK. totalFailures is stable and ideally equals 0. Example: connected: true totalFailures: 0 |
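The interpretation above can be sketched as a small check over periodically sampled values. This is a minimal illustration, not part of Jira: the `Sample` type, the function name, the three status labels, and the idea of collecting samples with an external JMX client are all assumptions.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Sample:
    """One reading of a connection state metric (hypothetical type)."""
    connected: bool
    total_failures: int


def assess_connection(samples: List[Sample]) -> str:
    """Classify a chronological, non-empty list of samples."""
    if not samples:
        raise ValueError("at least one sample is required")
    latest = samples[-1]
    # totalFailures accumulates since the last restart, so a higher last
    # reading than the first one means new failures occurred in this window.
    growing = latest.total_failures > samples[0].total_failures
    if not latest.connected and growing:
        return "failing"    # disconnected and failures keep accumulating
    if not latest.connected or growing:
        return "degraded"   # a recent failure, but not clearly permanent
    return "healthy"        # connected and totalFailures is stable
```

For example, `assess_connection([Sample(True, 20), Sample(False, 23)])` returns `"failing"`, which corresponds to the warning example in the table.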

Incoming mail server connection state

The connection state metric for each incoming mail server is created once it’s added to Jira. Metrics are compatible with all types of mail servers and all authentication types.

mail.incoming.connection.state

mail.incoming.connection.state.custom attempts to connect to a remote server and open the INBOX folder in read-only mode. This operation is performed once a minute.

Only one measuring process can run at a time to avoid overwriting results. Since the mail servers are remote and can have an individually defined connection timeout, during the measurement process, the timeouts are overridden to the standard maximum of 10 seconds.

For servers using basic authentication, the attempt to open a connection will fail if there is a problem. For servers using OAuth, a bad connection is only visible when trying to open the Inbox folder.

When an incoming mail server is deleted, its metric is unregistered too. Incoming mail servers are differentiated by their names, which are stored as metric tags. Note that renaming a mail server creates a new metric.

A sample MBean ObjectName for this metric will look as follows: com.atlassian.jira:type=metrics,category00=mail,category01=incoming,category02=connection,category03=state,name=custom,tag.serverName=<mailName>.

The tag tag.serverName contains the name of your configured incoming mail server, with every space character replaced by _. For example, the mail server name Google mail box becomes Google_mail_box.
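The naming rule can be sketched as a helper that builds the ObjectName for a given server name. The function name is hypothetical, and only the space replacement described above is applied. How other special characters in a server name are handled isn't covered here.

```python
def incoming_mail_object_name(server_name: str) -> str:
    """Build the MBean ObjectName for an incoming mail server's state metric."""
    # Every space in the configured server name becomes an underscore.
    tag = server_name.replace(" ", "_")
    return (
        "com.atlassian.jira:type=metrics,"
        "category00=mail,category01=incoming,"
        "category02=connection,category03=state,"
        f"name=custom,tag.serverName={tag}"
    )


print(incoming_mail_object_name("Google mail box"))
```

The resulting string ends with `tag.serverName=Google_mail_box` and can be passed to whichever JMX client you use to query the metric.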

For OAuth, the authentication token is valid for about an hour. If there’s an issue with the authentication process or with refreshing the token, you'll only find out once the currently active authentication token expires.

Available custom metric values: connected (true or false), totalFailures.

| Warning markers | Signs of healthiness |
|---|---|
| For connected, the false value indicates that Jira couldn't connect to the server or open the INBOX folder due to connectivity or authorization issues. For totalFailures, the constantly growing number of totalFailures means that this is most likely a permanent issue that needs your attention. Example: connected: false totalFailures: 23 | Example: connected: true totalFailures: 0 |

External user directories connectivity

Two factors for external user directories are measured:

- Connection state
  - values – true or false
  - totalFailures – the total number of failures
- Latency
  - value – the current value in milliseconds and statistics

These values are measured for every type of user directory except the internal user directory. The measurement is performed once a minute. User directory metrics are differentiated by the directory names, which are stored as metric tags. If you change the name of a user directory, a new metric will be created. Swapping names between user directories will adversely affect the readability of metrics.

Disabling or removing a user directory immediately removes its metrics from JMX.

Let’s assume you have two user directories: Example_UD_1 and Example_UD_2.

Example_UD_1 has an average latency of around 20 ms. Example_UD_2 has a latency of around 120 ms. Once you swap their names, from the metrics' perspective, you'll see that the latencies of Example_UD_2 have become significantly lower, while the latencies of Example_UD_1 have become higher.

We don't recommend swapping the names of user directories if this isn't necessary.
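The effect of a name swap can be illustrated with a tiny simulation. The internal identifiers `dir-a` and `dir-b` and the helper name are hypothetical. The sketch only shows that metrics follow the name, not the underlying directory.

```python
# Typical latency in ms of two real directories, keyed by a hypothetical id.
latency_by_directory = {"dir-a": 20, "dir-b": 120}


def series_by_name(names):
    """Return the latency reported under each metric name.

    `names` maps a directory id to its currently configured name.
    """
    return {names[d]: latency_by_directory[d] for d in names}


before = series_by_name({"dir-a": "Example_UD_1", "dir-b": "Example_UD_2"})
after = series_by_name({"dir-a": "Example_UD_2", "dir-b": "Example_UD_1"})

print(before)
print(after)
```

After the swap, the series named Example_UD_1 reports 120 ms and the series named Example_UD_2 reports 20 ms, although neither directory actually changed.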

The connection between Jira and the LDAP or Crowd user directories is tracked through a special health check. Learn more about it and how to interpret its results

user.directory.connection.latency

user.directory.connection.latency is measured by performing a user search on an uncached user directory. The query doesn’t specify any parameters or restrictions, and the maximum number of results is set to one.

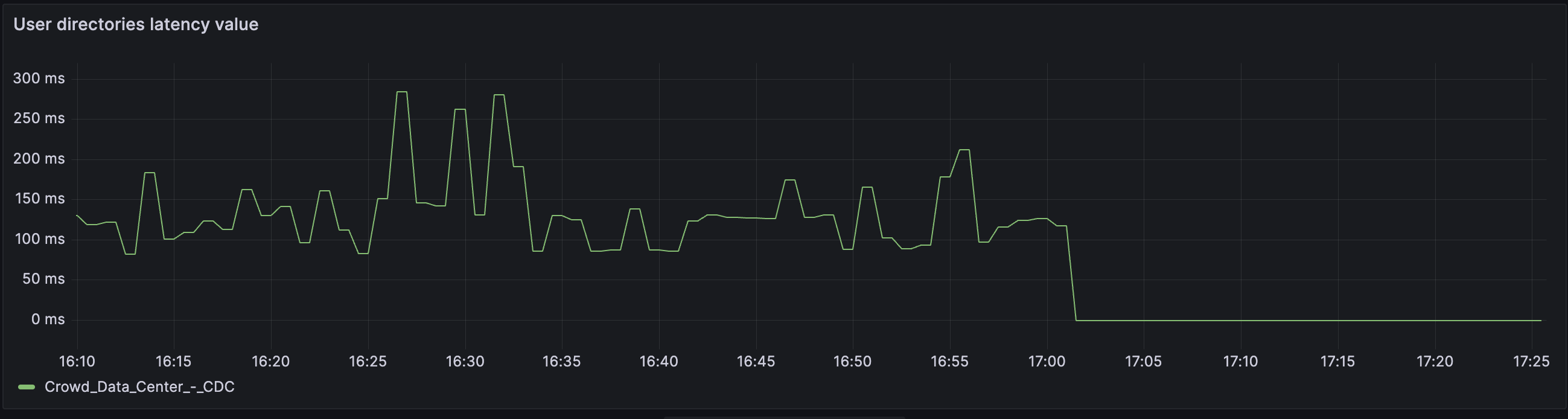

Check the chart with the user.directory.connection.latency.value metric dropping to the -1 value.

Available metrics: value, statistics.

| Warning markers | Signs of healthiness |
|---|---|
| For value, any occurrence of -1, which means that Jira couldn't connect to the machine or perform the search. Other markers: issues with a user directory, authentication, etc. For statistics, the 50th percentile is growing over time. This means that the average response time is higher than usual and users might experience some delay when trying to authenticate or get authorization. Example: value: -1 50thPercentile: 2450 99thPercentile: 7624 max: 14845 ... | For value, there are no occurrences of -1 and the latency to the server is low. For statistics, the 50th percentile is stable with a reasonable amount of latency. It can differ based on where your UD is located. Example: value: 375 50thPercentile: 348 99thPercentile: 597 max: 673 ... |
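Both warning markers can be checked over a window of collected readings. The following is a minimal sketch: the function name, the half-window comparison, and the `growth_factor` of 1.2 are assumptions chosen for illustration, not thresholds defined by Jira.

```python
from typing import List


def latency_warnings(
    values: List[float],
    p50_history: List[float],
    growth_factor: float = 1.2,  # assumed tolerance, tune for your instance
) -> List[str]:
    """Return warning messages for a window of latency readings."""
    warnings = []
    # A value of -1 means the connection or the search failed.
    failures = sum(1 for v in values if v == -1)
    if failures:
        warnings.append(f"{failures} failed measurement(s) (value == -1)")
    # Treat the median as growing when the newer half of the window
    # averages noticeably above the older half.
    half = len(p50_history) // 2
    if half:
        older = sum(p50_history[:half]) / half
        newer = sum(p50_history[-half:]) / half
        if newer > older * growth_factor:
            warnings.append("50th percentile is growing over time")
    return warnings
```

With stable readings such as `latency_warnings([375, 380], [348, 350, 349, 351])`, the function returns an empty list.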

user.directory.connection.state.custom

user.directory.connection.state.custom performs the same check as the Test connection configuration under Administration > System > User directories. The metric is checked differently depending on the type of the user directory:

- LDAP – checks the connection with the machine (connectivity only).
- Internal with LDAP Authentication – checks the connection with the machine (connectivity only).
- Active Directory – searches users (connectivity and authentication).
- Crowd – searches users (connectivity and authentication).

Check the chart with a 2-hour outage on the external user directory.

Available custom metric values: connected (true or false), totalFailures (the sum of false values).

| Warning markers | Signs of healthiness |
|---|---|
| For connected, depending on the type of user directory, the false value indicates that Jira couldn't connect to the machine or perform a user search. While the first check fails only for connectivity issues, the second one can also fail for authentication reasons. For totalFailures, the constantly growing number of totalFailures means that this is most likely a permanent issue that needs your attention. Example: connected: false totalFailures: 23 | For connected, the true value indicates that there are no issues with the connection between a user directory’s machine and Jira or that there are no issues with the connection and authentication. Example: connected: true totalFailures: 0 |

Shared home write latency

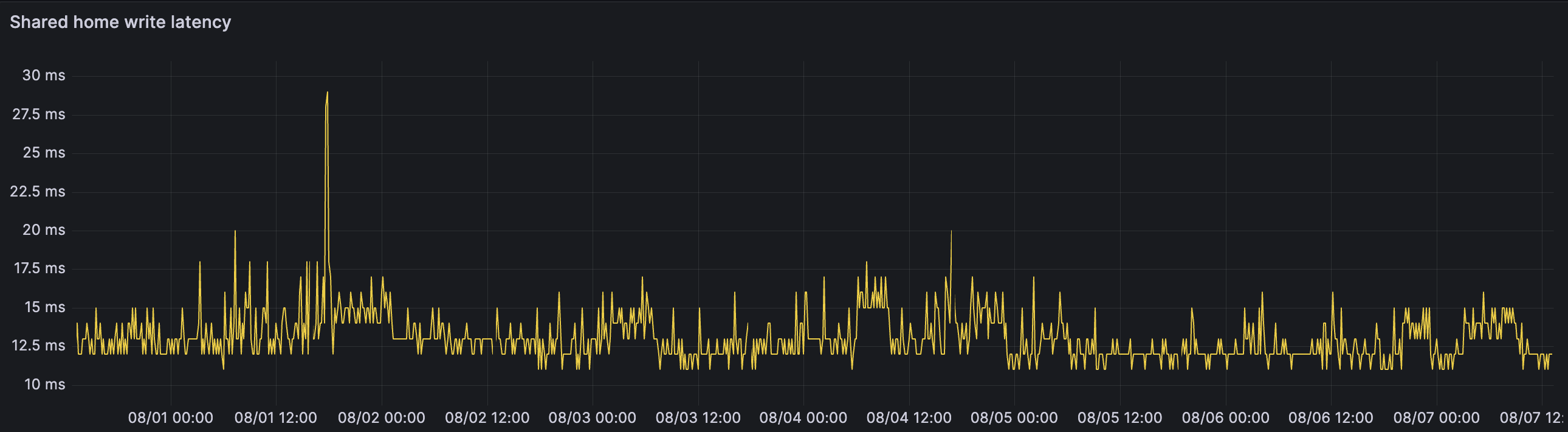

For clustered Data Center instances, the time to write sample data to the shared home is measured. High shared home latency will impact attachments, avatars, index snapshots, and other items.

home.shared.write.latency

home.shared.write.latency measures the time in milliseconds to write a sample file on the shared home. A few measurements are taken every minute to improve metric accuracy. home.shared.write.latency.value contains a calculated median latency from the last iteration. home.shared.write.latency.statistics contains aggregated statistics from every individual measurement and should give better insight into the outliers and latency distribution.

Only one measuring process can run at a time. Whenever a timeout of 15 seconds per three file writes is breached, the .value metric will be updated with the -1 value. In this case, the shared home can be considered unreachable.
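The relationship between the individual writes, the median, and the -1 value can be sketched as follows. How Jira actually performs the writes and enforces the timeout isn't described here, so the function name, the list of per-write durations, and the way the 15-second budget is applied to the sum of the writes are assumptions.

```python
from statistics import median
from typing import List

TIMEOUT_MS = 15_000  # 15 seconds for the whole batch of three writes


def iteration_value(write_durations_ms: List[float], expected_writes: int = 3) -> float:
    """Return the median latency of one iteration, or -1 if it timed out."""
    timed_out = (
        len(write_durations_ms) < expected_writes   # some writes never finished
        or sum(write_durations_ms) > TIMEOUT_MS     # the batch exceeded the budget
    )
    if timed_out:
        return -1
    return median(write_durations_ms)
```

For example, three writes of 22, 23, and 31 ms give a value of 23, while three writes of 6 seconds each exceed the budget and give -1.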

Check the chart with the healthy home.shared.write.latency.statistics metric.

Available metrics: value, statistics.

| Warning markers | Signs of healthiness |
|---|---|
| For value, the -1 value indicates that Jira couldn't write data on the shared home within five seconds approximately. For statistics, a latency higher than 100 ms will impact the node performance and user interactions with attachments. Example: value: -1 50thPercentile: 340 99thPercentile: 856 max: 1153 ... | For value, there are no occurrences of the -1 value. For statistics, a latency lower than 30 ms indicates that Jira can quickly persist data on the shared home. Example: value: 23 50thPercentile: 22 99thPercentile: 31 max: 33 ... |

Local home write latency

Local home write latency metrics measure the local disk write performance: home.local.write.latency.synthetic and home.local.write.latency.indexwriter.

High local disk latency will have a significant impact on Jira performance in cache replication and index persistence.

home.local.write.latency.synthetic

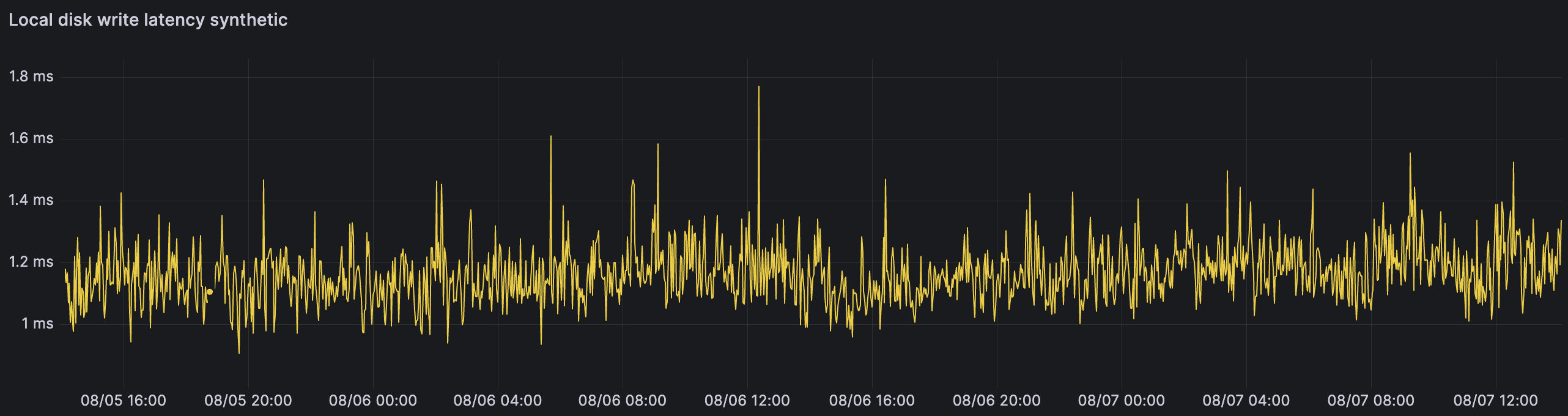

home.local.write.latency.synthetic measures the time of a synthetic file write operation with a guarantee of persistence on the local home. A few measurements are taken every minute to improve metric accuracy. This metric is designed to closely imitate the persisting of cache replication operations. home.local.write.latency.synthetic.value contains a calculated median latency from the last iteration. This value is reported in microseconds for better precision. home.local.write.latency.synthetic.statistics contains aggregated statistics from every individual measurement and should give better insight into the outliers and the latency distribution.

If it takes more than five seconds to make seven writes, the local disk is considered unreachable. This should never happen, as the Jira instance would become unusable.

Check the chart with the healthy home.local.write.latency.synthetic.value metric with a latency lower than 2 ms.

Available metrics: value, statistics.

| Warning markers | Signs of healthiness |
|---|---|
| For value, the -1 value indicates that Jira couldn't write data on the local disk. For statistics, a latency higher than 10 ms will impact a node's performance. It'll take more time to replicate changes across nodes and users may see stale data on a clustered instance. Example: value: -1 50thPercentile: 14.351 99thPercentile: 21.568 max: 67.916 ... | For value, there are no occurrences of the -1 value. For statistics, a latency lower than 10 ms indicates that Jira can quickly persist data on the local home. Example: value: 1374 50thPercentile: 1.374 99thPercentile: 2.351 max: 7.312 ... |
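Because the value is reported in microseconds while the 10 ms threshold is expressed in milliseconds, a unit conversion is needed before comparing them. The sketch below assumes that the statistics are reported in milliseconds, which is inferred from the examples in the table and not stated explicitly. The function name and status labels are hypothetical.

```python
def synthetic_write_status(value_us: float, p50_ms: float) -> str:
    """Classify local home write latency from one reading."""
    if value_us == -1:
        return "unreachable"          # Jira couldn't write to the local disk
    value_ms = value_us / 1000        # .value is reported in microseconds
    if value_ms > 10 or p50_ms > 10:  # 10 ms threshold from the table
        return "slow"
    return "healthy"
```

With the healthy example from the table, `synthetic_write_status(1374, 1.374)` returns `"healthy"`, because 1374 microseconds is 1.374 ms.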

home.local.write.latency.indexwriter

home.local.write.latency.indexwriter reports the time of flushing the Lucene index buffer to the local disk. The metric is based on the real traffic and represents the current status and performance of the Lucene subsystem. This metric is updated only when the Lucene buffer is persisted, usually after index updates. home.local.write.latency.indexwriter.statistics contains aggregated statistics from the measurements.

These metric values don’t reflect pure disk performance. The reported time is highly related to the volume of updated documents and may sporadically report high latency times unrelated to the disk performance.

Check the chart with the healthy home.local.write.latency.indexwriter.statistics metric.

Available metrics: statistics.

| Warning markers | Signs of healthiness |
|---|---|
| For value, long-lasting periods of high latency. For statistics, if the reported latency is close to the configured Lucene commit interval (30 seconds by default), the process can’t catch up to the volume of updated documents. Example: value: 6613 50thPercentile: 6613 99thPercentile: 9851 max: 11849 ... | For value, a latency lower than 1 ms indicates that Jira can quickly persist index updates on the local disk. For statistics, spikes in the latency are infrequent. Example: value: 361 50thPercentile: 361 99thPercentile: 957 max: 1842 ... |
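The commit interval marker can be sketched as a single comparison. This checks only that one marker, not the other signs in the table. It assumes the statistics are reported in milliseconds, and the `ratio` of 0.8 used to express "close to" is an arbitrary illustration, not a value defined by Jira.

```python
COMMIT_INTERVAL_MS = 30_000  # default Lucene commit interval of 30 seconds


def index_writer_keeping_up(
    p99_ms: float,
    commit_interval_ms: float = COMMIT_INTERVAL_MS,
    ratio: float = 0.8,  # assumed meaning of "close to the commit interval"
) -> bool:
    """Return False when flush latency approaches the commit interval."""
    return p99_ms < ratio * commit_interval_ms
```

A 99th percentile of 27000 ms would return `False`, suggesting that the index writer can't keep up with the volume of updated documents.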

Internode latency

For clustered Data Center instances, the latency of communication between nodes is measured with the metrics node.latency.statistics and node.connection.state.custom. The metrics are based on the real traffic and represent the current status and performance of the cache replication subsystem. High internode latency will impact cache replication and DBR index replication.

The node.latency.statistics metric measures the time in milliseconds to send a cache invalidation message to other nodes through RMI. This can be interpreted as a basic internode communication latency. This metric will be unregistered from JMX when the connection status is set to disconnected, and the metric will appear again when the latency can be measured.

Check the chart with internode latency during the Jira redeployment to new machines.

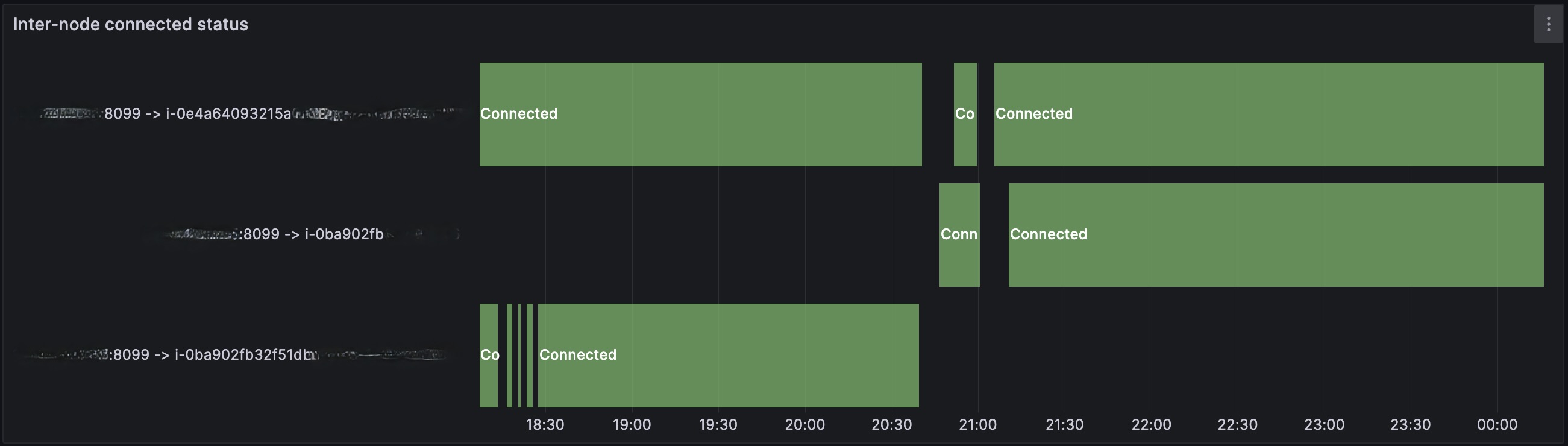

The node.connection.state.custom metric reports the current connection status to other nodes in the cluster. If the cache was successfully replicated, the status will be reported as connected. If the cache replication mechanism failed due to a network connection issue, the status will be reported as disconnected.

Check the chart with the internode connection state during the Jira redeployment.

Both metrics are created for every other node in the cluster. The metrics include the tag tag.destNode=<nodeId>.

If a node is shut down properly, you can expect both metrics to disappear from the JMX registry. If the node is unexpectedly unreachable and Jira tries to replicate a cache operation to it, you can expect node.connection.state.custom to report as disconnected.

| Warning markers | Signs of healthiness |
|---|---|
| For connected, the disconnected state for any node means the caches can’t be replicated. For node.latency.statistics, a latency higher than 10 ms will impact the node cache replication mechanism. Users may find inconsistent data on different nodes. Example: connected: false totalFailures: 647 50thPercentile: 47.31 99thPercentile: 85.51 max: 97.01 ... | All nodes are in the connected state. For node.latency.statistics, a latency lower than 10 ms indicates that Jira can quickly replicate changes between nodes. Example: connected: true totalFailures: 3 50thPercentile: 2.34 99thPercentile: 8.43 max: 11.79 ... |
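Since both metrics exist once per destination node, a per-node evaluation is a natural fit. The sketch below is an assumption-laden illustration: the function name, the dictionaries keyed by the tag.destNode value, and the message wording are hypothetical. It accounts for the latency metric being unregistered while a node is disconnected.

```python
from typing import Dict, List


def cluster_warnings(
    connected_by_node: Dict[str, bool],
    p50_ms_by_node: Dict[str, float],
) -> List[str]:
    """Return one warning per problematic destination node."""
    warnings = []
    for node in sorted(connected_by_node):
        if not connected_by_node[node]:
            warnings.append(f"{node}: disconnected, caches can't be replicated")
            continue
        # The latency metric may be missing, for example right after a reconnect.
        p50 = p50_ms_by_node.get(node)
        if p50 is not None and p50 > 10:
            warnings.append(f"{node}: latency above 10 ms")
    return warnings
```

An empty result means that every node is connected and that every available median latency is at or below 10 ms.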