Live monitoring using the JMX interface

This article describes how to expose JMX MBeans within Jira for monitoring with a JMX client and how to use JMX MBeans for in-product diagnostics.

This guide provides a basic introduction to the JMX interface and is provided as is. Our support team can help you troubleshoot a specific Jira problem but can't help you set up your monitoring system or interpret the results.

What is JMX?

JMX (Java Management Extensions) is a technology for monitoring and managing Java applications. JMX uses objects called MBeans (Managed Beans) to expose data and resources from your application. For large instances of Jira Server or Jira Data Center, enabling JMX allows you to more easily monitor the consumption of application resources and diagnose performance issues related to indexing. This enables you to make better decisions about how to maintain and optimize machine resources.
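To make the MBean idea concrete, here is a minimal Java sketch that lists every MBean registered under one JMX domain, using only the standard javax.management API. It assumes the Jira metrics are registered under the com.atlassian.jira domain named in the tables that follow; the class and method names are hypothetical. Because listNames accepts any MBeanServerConnection, the same query works against a remote Jira JVM once a JMX client is connected.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.lang.management.ManagementFactory;
import java.util.ArrayList;
import java.util.List;
import javax.management.MBeanServerConnection;
import javax.management.MalformedObjectNameException;
import javax.management.ObjectName;

final class MBeanLister {
    private MBeanLister() {}

    /** Sorted canonical names of all MBeans in {@code domain}, read through any connection. */
    static List<String> listNames(MBeanServerConnection connection, String domain) throws IOException {
        ObjectName pattern;
        try {
            // "domain:*" is an ObjectName pattern matching every MBean registered in that domain.
            pattern = new ObjectName(domain + ":*");
        } catch (MalformedObjectNameException e) {
            throw new IllegalArgumentException("Invalid JMX domain: " + domain, e);
        }
        List<String> names = new ArrayList<>();
        for (ObjectName name : connection.queryNames(pattern, null)) {
            names.add(name.getCanonicalName());
        }
        names.sort(null); // natural (alphabetical) order
        return names;
    }

    /** Same query against the MBean server of the JVM this code runs in. */
    static List<String> listLocalNames(String domain) {
        try {
            return listNames(ManagementFactory.getPlatformMBeanServer(), domain);
        } catch (IOException e) {
            // Not expected for the in-process server, which performs no network I/O.
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        // Outside a Jira JVM this prints nothing; the com.atlassian.jira domain only exists in Jira.
        listLocalNames("com.atlassian.jira").forEach(System.out::println);
    }
}
```

Running it outside Jira against the java.lang domain is a quick way to see what the output looks like, for example java.lang:type=Runtime.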

Metrics collected by Jira

The following table lists metrics (MBeans) that are collected by Jira. All of them are grouped under the com.atlassian.jira JMX domain.

| Metric | Description | Reset after restarting Jira |
|---|---|---|
| dashboard.view.count | The number of times all dashboards were viewed by users | Yes |
| entity.attachments.total | The number of attachments | N/A |
| entity.components.total | The number of components | N/A |
| entity.customfields.total | The number of custom fields | N/A |
| entity.filters.total | The number of filters | N/A |
| entity.groups.total | The number of user groups | N/A |
| entity.issues.total | The number of issues | N/A |
| entity.users.total | The number of users | N/A |
| entity.versions.total | The number of versions created | N/A |
| issue.assigned.count | The number of times issues were assigned or reassigned to users (counts each action) | Yes |
| issue.created.count | The number of issues that you created after starting your Jira instance | Yes |
| issue.link.count | The number of issue links created after starting your Jira instance | Yes |
| issue.search.count | The number of times you searched for issues | Yes |
| issue.updated.count | The number of times you updated issues (each update after adding or changing some information) | Yes |
| issue.worklogged.count | The number of times you logged work on issues | Yes |
| jira.license | The types of licenses you have, the number of active users, and the maximum number of users available for each license type | N/A |
| quicksearch.concurrent.search | The number of concurrent searches that are being performed in real-time by using the quick search. You can use it to determine whether the limit set for concurrent searches is sufficient or should be increased. | Yes |
| web.requests | The number of requests (invocation.count) and the total response time (total.elapsed.time) | Yes |
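Counters marked Yes restart from zero when Jira restarts, so a monitoring script usually turns two samples into a rate instead of graphing raw totals. The following minimal Java sketch shows one way to do that, plus the average response time implied by web.requests (total.elapsed.time divided by invocation.count). The restart heuristic and the class and method names are assumptions for illustration, not how Jira or any particular JMX client computes these values.

```java
final class CounterMath {
    private CounterMath() {}

    /**
     * Per-second rate between two samples of a counter that resets to zero on restart.
     * Assumption: if the counter went down, Jira restarted and the new value counts from zero.
     */
    static double ratePerSecond(long previous, long current, double elapsedSeconds) {
        if (elapsedSeconds <= 0) {
            throw new IllegalArgumentException("elapsedSeconds must be positive");
        }
        long delta = current >= previous ? current - previous : current;
        return delta / elapsedSeconds;
    }

    /** Mean time per request, in whatever unit total.elapsed.time is reported in. */
    static double averageResponseTime(long totalElapsedTime, long invocationCount) {
        return invocationCount == 0 ? 0.0 : (double) totalElapsedTime / invocationCount;
    }
}
```

For example, sampling issue.created.count as 100 and then 160 one minute apart gives ratePerSecond(100, 160, 60) = 1.0. To get the average response time over a window instead of since startup, pass the differences between two samples of total.elapsed.time and invocation.count. The heuristic misses a restart if the counter has already grown past the previous sample, so a short sampling interval keeps it more reliable.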

The following metrics were added in Jira 8.1 and are exposed under com.atlassian.jira/metrics. All of them are reset when Jira restarts.

| Metric path | Description |
|---|---|
| comment | Metrics related to comment operations |
| comment/Create | A comment being created |
| comment/Delete | A comment being deleted |
| comment/Update | A comment being updated |
| indexing | Metrics related to issue, comment, worklog, and change indexing |
| indexing/CreateChangeHistoryDocument | Index documents being created for Change History entities. Note that many Change History Documents can be created for every issue. |
| indexing/CreateCommentDocument | Index documents being created for Comment entities |
| indexing/CreateIssueDocument | Index documents being created for Issue entities |
| indexing/IssueAddFieldIndexers | FieldIndexer modules enrich Issue documents as part of Index document creation. Plugins can register custom FieldIndexer modules. These metrics provide insight into how much time is spent in FieldIndexer and can be used to track down indexing performance issues caused by them. The metrics describe how much time was spent in all FieldIndexers combined per Issue document created. |
| indexing/LuceneAddDocument | How much time was spent adding a document to the Lucene index |
| indexing/LuceneDeleteDocument | How much time was spent deleting one or more documents matching a term from the Lucene index |
| indexing/LuceneOptimize | Metrics about Lucene index optimization (triggered manually from Jira) |
| indexing/LuceneUpdateDocument | How much time was spent adding a created document to the Lucene index |
| indexing/ReplicationLatency | Replication latency is the time between an issue, comment, or worklog being indexed on the node where the change was made and the indexing operation being replayed on the current node |
| indexing/WaitForLucene | Documents are written to the Lucene index asynchronously. This metric captures how much time Jira’s indexing thread spent waiting for Lucene to complete the write. |
| indexing/issueAddSearchExtractors | EntitySearchExtractor enriches issue documents as part of Index document creation. Plugins can register custom EntitySearchExtractor modules. These metrics provide insight into how much time is spent in EntitySearchExtractor and can be used to track down indexing performance issues they cause. The metrics describe how much time was spent in all EntitySearchExtractors combined per issue document created. |
| issue | Metrics about issue operations |
| issue/Create | Issue being created |
| issue/Delete | Issue being deleted |
| issue/Index | An issue being added to the Lucene index. This covers issue document creation and adding the document to the index. |
| issue/DeIndex | An issue being removed from the Lucene index |
| issue/ReIndex | An issue being re-indexed as a result of issue updates. This covers: creating an issue document, deleting the old document from the index, and adding new documents to the index. |
| issue/Update | An issue being updated |
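The metric paths above appear as MBeans in your JMX client, but the attributes each one exposes aren't listed here. A generic way to inspect them is to read every readable attribute of an MBean, as in this minimal Java sketch built on MBeanServerConnection.getMBeanInfo and getAttribute. Look up the exact ObjectName of the metric in your JMX client first; the class name is hypothetical, and the local wrapper is only there so the sketch can be tried outside Jira.

```java
import java.io.IOException;
import java.lang.management.ManagementFactory;
import java.util.Map;
import java.util.TreeMap;
import javax.management.JMException;
import javax.management.MBeanAttributeInfo;
import javax.management.MBeanServerConnection;
import javax.management.ObjectName;

final class MBeanDump {
    private MBeanDump() {}

    /** Every readable attribute of one MBean, sorted by name; attributes that fail to read are skipped. */
    static Map<String, Object> readAttributes(MBeanServerConnection connection, ObjectName name)
            throws IOException, JMException {
        Map<String, Object> values = new TreeMap<>();
        for (MBeanAttributeInfo info : connection.getMBeanInfo(name).getAttributes()) {
            if (!info.isReadable()) {
                continue;
            }
            try {
                values.put(info.getName(), connection.getAttribute(name, info.getName()));
            } catch (JMException | RuntimeException e) {
                // Some attributes can't be read on every JVM or in every state; skip them.
            }
        }
        return values;
    }

    /** Convenience wrapper for the JVM this code runs in. */
    static Map<String, Object> readLocalAttributes(String objectName) {
        try {
            return readAttributes(ManagementFactory.getPlatformMBeanServer(), new ObjectName(objectName));
        } catch (IOException | JMException e) {
            throw new IllegalStateException("Could not read MBean " + objectName, e);
        }
    }

    public static void main(String[] args) {
        readLocalAttributes("java.lang:type=Runtime")
                .forEach((attribute, value) -> System.out.println(attribute + " = " + value));
    }
}
```

Network errors (IOException) are deliberately not swallowed, so a dropped remote connection still surfaces instead of producing an empty result.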

Metrics collected by Assets in Jira Service Management

The following table lists metrics (MBeans) that are collected by Assets in Jira Service Management.

| Metric | Description |
|---|---|
| assets.objectindeximpl.objects_load_on_add_ms | How long it takes to load objects when a message is received. This metric tells you how long it took to populate the index from the database. |
| assets.objectindeximpl.missing_objects_reload_retry_count_on_add | The number of attempts to reload an object when adding the object to the database. This metric indicates how many attempts it took to load the object from the database because of delays in writing to the database. |
| assets.objectindeximpl.missing_objects_count_on_add | The number of missing objects on the first attempt at adding them to the database. This metric indicates the number of missing objects. |
| assets.objectindeximpl.missing_objects_reload_on_add_ms | The time in milliseconds that was spent trying to reload from the database when an object wasn’t available. This metric shows how much time was spent looping and waiting for the object to be available in the database. |
| assets.objectindeximpl.objects_load_on_update_ms | The time in milliseconds to load updates on the first attempt. |
| assets.objectindeximpl.missing_objects_reload_on_update_ms | The time in milliseconds that is spent waiting for updates to appear in the database. |
| assets.objectindeximpl.missing_objects_reload_retry_count_on_update | The number of attempts to reload an object on update until the right version was found. |
| assets.objectindeximpl.missing_objects_reload_retry_count_on_update | The number of missing object updates that were not ready in the database when an update started. |
| assets.objectindeximpl.objects_removal_ms | The time in milliseconds that it took to remove objects from Assets. |
| assets.assetsbatchreplicationmessageworkqueuepoller.process_ms | The time in milliseconds that it takes to process a replication message. |
| assets.assetsbatchreplicationmessageworkqueuepoller.number_of_create_failures | The number of failures in a message batch when an object is created. |
| assets.assetsbatchreplicationmessageworkqueuepoller.number_of_update_failures | The number of failures in a message batch when an object is updated. |
| assets.cachemessageworkqueuepoller.process_batch_size | The number of object changes that were batched together to be sent across the cluster. |
| assets.cachemessageworkqueuepoller.wait_for_clearance_ms | The time in milliseconds that is spent waiting for database updates to be drained from the application or submitted to the database. |
| assets.cachemessageworkqueuepoller.process_ms | The total time in milliseconds that it takes to batch and send a replication message. |
| assets.assetsbatchreplicationmessagereceiver.work_queue_size | The size of the work queue in the replication message receiver. |
| assets.assetsbatchreplicationmessagereceiver.work_queue_gauge | The current size of the work queue in the replication message receiver. |
| assets.assetsobjectreplicationbatchmanager.work_queue_size | The work queue size in the batch manager collecting individual changes to batch and send across the cluster. |
| assets.assetsobjectreplicationbatchmanager.work_queue_gauge | The current work queue size in the batch manager collecting individual changes to batch and send across the cluster. |
| assets.defaultassetsbatchmessagesender.send_message | The amount of time to dispatch the message and continue processing the next message. |
| assets.insightcachereplicatorimpl.legacy_object_receiver_queue_size | The queue size on the legacy replication mechanism using cluster messages. |
| assets.insightcachereplicatorimpl.object_replication_dispatch | The amount of time it takes to |
| assets.insightcachereplicatorimpl.legacy_object_send_queue_size | The queue size after offering a message for legacy index replication using the cluster message cache. |
| assets.assetsreplicationretryqueuepoller.retry_queue_size | The size of the retry/failure queue. |
| assets.assetsreplicationretryqueuepoller.create_retry_attempts | The number of attempts to get successful processing for creating a message. |
| assets.assetsreplicationretryqueuepoller.update_retry_attempts | The number of attempts to get successful processing for updating a message. |
| assets.assetsreplicationretryqueuepoller.replay_wait_time | The amount of waiting time that was added before retrying the update. This metric helps you see whether processing is keeping up with the queue, or whether updates are backlogged and being processed immediately. If no waiting time is applied, the queue is not being processed quickly enough. |
| assets.assetsreplicationretryqueuepoller.dead_letter_queue_size | The number of items in the dead letter queue. |
| assets.assetsreplicationretryqueuepoller.process_retry_excluding_wait_ms | The time in milliseconds that it takes to process the failures, excluding any wait time prior to processing. This metric indicates how long the batches of failures are taking to be processed. It will also help to see if more delays are being added in the |
| assets.assetsreplicationretryqueuepoller.dead_letter_queue_gauge | The current number of messages in the dead letter queue. |
| assets.assetsreplicationretryqueuepoller.retry_queue_gauge | The current number of messages in the retry queue. |
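Some Assets queues are reported both as a size metric and as a gauge of the current value, for example work_queue_size alongside work_queue_gauge. When watching gauges such as retry_queue_gauge or dead_letter_queue_gauge, one simple check is whether the value keeps rising across consecutive polls. This minimal Java sketch flags that pattern; the window length, the class name, and the idea that steady growth deserves a closer look are assumptions, not Atlassian guidance.

```java
import java.util.List;

final class QueueTrend {
    private QueueTrend() {}

    /**
     * True if the last {@code window} samples of a queue gauge are strictly increasing,
     * meaning the queue grew at every poll in that window.
     */
    static boolean isGrowing(List<Long> samples, int window) {
        if (window < 2) {
            throw new IllegalArgumentException("window must be at least 2");
        }
        if (samples.size() < window) {
            return false; // not enough data yet
        }
        List<Long> recent = samples.subList(samples.size() - window, samples.size());
        for (int i = 1; i < recent.size(); i++) {
            // <= unboxes both Longs, so this is a numeric comparison.
            if (recent.get(i) <= recent.get(i - 1)) {
                return false;
            }
        }
        return true;
    }
}
```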

Monitoring Jira

To monitor Jira, first enable JMX monitoring, then use a JMX client to view the metrics.

Good to know

Viewing the metrics will always have some performance impact on Jira. We recommend that you don't refresh them more than once a second.
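If you script your own polling instead of relying on a JMX client's refresh setting, you can enforce the one-second floor in code. In this minimal Java sketch (the names are hypothetical), the requested interval is clamped to at least one second, and a fixed-delay schedule makes a slow read push the next one back instead of letting reads pile up.

```java
import java.time.Duration;
import java.util.concurrent.Executors;
import java.util.concurrent.ScheduledExecutorService;
import java.util.concurrent.TimeUnit;

final class MetricPoller {
    /** Shortest refresh interval recommended for viewing Jira metrics. */
    static final Duration MIN_INTERVAL = Duration.ofSeconds(1);

    private MetricPoller() {}

    /** Returns the requested interval, raised to one second if it is shorter. */
    static Duration effectiveInterval(Duration requested) {
        return requested.compareTo(MIN_INTERVAL) < 0 ? MIN_INTERVAL : requested;
    }

    /** Runs {@code poll} repeatedly, never more often than once a second. */
    static ScheduledExecutorService start(Runnable poll, Duration requested) {
        ScheduledExecutorService scheduler = Executors.newSingleThreadScheduledExecutor();
        long millis = effectiveInterval(requested).toMillis();
        // Fixed delay (not fixed rate): the next poll starts `millis` after the previous one ends.
        scheduler.scheduleWithFixedDelay(poll, 0, millis, TimeUnit.MILLISECONDS);
        return scheduler;
    }
}
```

Call shutdown() on the returned scheduler when you stop monitoring; its worker thread is not a daemon thread and keeps the JVM running.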

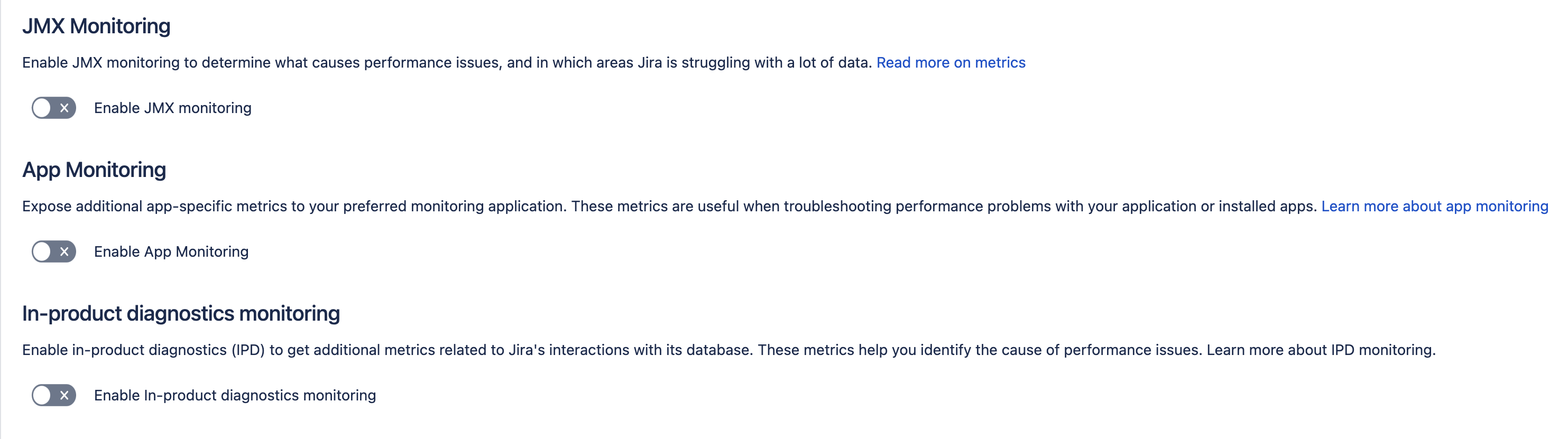

Enabling JMX monitoring in Jira

All metrics are collected by default, but they are only exposed after you enable JMX monitoring. You must be a Jira administrator to do this.

- From the top navigation bar, select Administration > System.

- Go to JMX monitoring.

- Toggle Enable JMX monitoring.

Monitoring with JConsole

After you enable JMX monitoring, you can use any JMX client to view the metrics. To keep it quick and easy, we describe how to view them with JConsole. You can monitor your Jira instance either locally or remotely:

Monitoring Jira locally is good if you're troubleshooting a particular issue or only need to monitor Jira for a short time. Local monitoring can have a performance impact on your server, so it's not recommended for long-term monitoring of your production system.

Monitoring Jira remotely is recommended for production systems as it does not consume resources on your Jira server.
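For remote monitoring, a JMX client connects to Jira's JVM over RMI. This minimal Java sketch shows the standard javax.management.remote calls such a client makes. It assumes Jira's JVM was started with the usual com.sun.management.jmxremote.* options and password authentication, which this article doesn't cover; the host, port 8099, and the monitorRole/change-me credentials are hypothetical placeholders. In JConsole, the equivalent is entering host:port and the same credentials under Remote Process.

```java
import java.io.IOException;
import java.net.MalformedURLException;
import java.util.HashMap;
import java.util.Map;
import javax.management.MBeanServerConnection;
import javax.management.remote.JMXConnector;
import javax.management.remote.JMXConnectorFactory;
import javax.management.remote.JMXServiceURL;

final class RemoteJmx {
    private RemoteJmx() {}

    /** Standard RMI connector URL for a JVM listening for JMX on {@code host:port}. */
    static JMXServiceURL serviceUrl(String host, int port) {
        try {
            return new JMXServiceURL("service:jmx:rmi:///jndi/rmi://" + host + ":" + port + "/jmxrmi");
        } catch (MalformedURLException e) {
            throw new IllegalArgumentException("Invalid host or port: " + host + ":" + port, e);
        }
    }

    /** Connects with username/password credentials and prints how many MBeans are registered. */
    static void printMBeanCount(String host, int port, String user, String password) throws IOException {
        Map<String, Object> env = new HashMap<>();
        env.put(JMXConnector.CREDENTIALS, new String[] {user, password});
        // try-with-resources closes the connection when we're done.
        try (JMXConnector connector = JMXConnectorFactory.connect(serviceUrl(host, port), env)) {
            MBeanServerConnection connection = connector.getMBeanServerConnection();
            System.out.println("MBeans registered: " + connection.getMBeanCount());
        }
    }

    public static void main(String[] args) throws IOException {
        // Hypothetical host, port, and read-only monitoring account; replace with your own.
        printMBeanCount("jira.example.com", 8099, "monitorRole", "change-me");
    }
}
```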

Known security issues

We’re providing a robust fix for a potential security vulnerability that could be exploited through an RCE (remote code execution) JMX attack. In this attack, a remote user with valid credentials for JMX monitoring can execute arbitrary code on Jira Data Center through Java deserialization, even if the account is read-only (monitorRole).

To prevent fabricated data from entering the system through requests, we use a blocklist deserialization filter based on the JVM's ObjectInputFilter.

If you use a custom JDK that is missing the class required for your Java version, your Jira node won’t start.

atlassian-jira.log will contain the following error: BlocklistDeserializationFilter has not been set up. This error means your Java environment has a security issue.

To eliminate the error and improve the security of your Jira instance, make sure your JDK contains the following classes:

- For JDK 8: the class sun.misc.ObjectInputFilter must be available on the classpath.

- For JDK 11 and later: the class java.io.ObjectInputFilter must be available on the classpath.
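To confirm which of these classes a JDK provides before you start Jira, you can look them up by name. This minimal Java sketch only checks that the classes can be loaded; it doesn't install or reproduce Jira's blocklist filter. Run it with the same java binary that Jira uses; the class name is hypothetical.

```java
final class FilterSupport {
    private FilterSupport() {}

    // Candidate class names, checked in order.
    private static final String[] CANDIDATES = {
        "java.io.ObjectInputFilter",  // JDK 9 and later (Jira uses it on JDK 11 and later)
        "sun.misc.ObjectInputFilter"  // JDK 8 builds that include the JEP 290 backport
    };

    /** Returns the first filter class found on this JVM, or null if there is none. */
    static String availableFilterClass() {
        for (String name : CANDIDATES) {
            try {
                // initialize=false: only check that the class can be loaded.
                Class.forName(name, false, ClassLoader.getSystemClassLoader());
                return name;
            } catch (ClassNotFoundException e) {
                // Not on this JDK; try the next candidate.
            }
        }
        return null;
    }

    public static void main(String[] args) {
        String found = availableFilterClass();
        System.out.println(found != null
                ? "Found " + found + " on Java " + System.getProperty("java.version")
                : "No ObjectInputFilter class found on this JDK");
    }
}
```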

In-product diagnostics available through JMX

In Jira 9.3, we introduced a set of database connectivity metrics for in-product diagnostics, available through JMX.

In-product diagnostics (IPD) gives you and Atlassian Support greater insight into how running instances are operating.

IPD adds metrics that track Jira’s interactions with its database. Database connectivity metrics help you identify what in your environment or infrastructure might be causing performance issues.

In Jira 9.5, in-product diagnostics was extended with HTTP connection metrics and mail queue metrics. In Jira 9.8, we added more mail queue metrics that give a more detailed picture of mail queue contents and collect more data for performance monitoring.

The feature is disabled by default. Live metrics are available in the following formats:

- as new JMX MBeans

- as a history of snapshots of the JMX values in the new IPD log file, atlassian-jira-ipd-monitoring.log

The log file is stored in the {jira_home}\log folder, alongside the other Jira log files. It’s also included in the Support Zip file created by the Atlassian Troubleshooting and Support Tools (ATST) plugin. If needed, you can generate a Support Zip file with the plugin and send it to Atlassian Support, where we have internal tools to interpret it.

Communication

The feature communicates in the following ways:

- JMX: JMX MBeans are updated periodically based on an internal schedule.

- The log file atlassian-jira-ipd-monitoring.log: JMX values are snapshotted and recorded to the log file on a configurable schedule. By default, the JMX values are polled and written to the log file every 60 seconds. (This default has applied since Jira 9.3 EAP 02.)
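If you want to post-process the log file yourself (Atlassian Support uses internal tools for this), the first step is splitting each line into a timestamp and a message. This minimal Java sketch assumes each line starts with Log4j's default %d timestamp format, yyyy-MM-dd HH:mm:ss,SSS, followed by a space. The structure of the message itself isn't described in this article, so the sketch keeps it as an opaque string; the class name is hypothetical.

```java
import java.time.LocalDateTime;
import java.time.format.DateTimeFormatter;
import java.time.format.DateTimeParseException;

final class IpdLogLine {
    // Assumed Log4j default %d output, for example "2023-03-01 12:00:00,123" (23 characters).
    private static final DateTimeFormatter TIMESTAMP =
            DateTimeFormatter.ofPattern("yyyy-MM-dd HH:mm:ss,SSS");
    private static final int TIMESTAMP_LENGTH = 23;

    final LocalDateTime timestamp;
    final String message;

    private IpdLogLine(LocalDateTime timestamp, String message) {
        this.timestamp = timestamp;
        this.message = message;
    }

    /** Splits one line into timestamp and message, or returns null if it doesn't match. */
    static IpdLogLine parse(String line) {
        if (line.length() <= TIMESTAMP_LENGTH || line.charAt(TIMESTAMP_LENGTH) != ' ') {
            return null;
        }
        try {
            LocalDateTime ts = LocalDateTime.parse(line.substring(0, TIMESTAMP_LENGTH), TIMESTAMP);
            return new IpdLogLine(ts, line.substring(TIMESTAMP_LENGTH + 1));
        } catch (DateTimeParseException e) {
            return null; // Not a timestamped entry, for example an indented continuation line.
        }
    }
}
```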

In-product diagnostics metrics

To use the metrics, make sure you’ve enabled JMX.

For more details on cross-product metrics, see the article Interpreting cross-product metrics for in-product diagnostics.

For more detailed definitions of Jira-specific metrics, see the article Interpreting Jira-specific metrics for in-product diagnostics.

Enabling In-product diagnostics monitoring

IPD monitoring is enabled by default. To manage it:

- From the top navigation bar, select Administration > System.

- In the left-side panel, go to System Support and select Monitoring.

- Use the In-product diagnostics monitoring toggle to enable or disable IPD monitoring.

The toggle is also available in version 9.4.3. Check the Jira Software release notes for updates.

REST API

In Jira 9.5, we introduced a REST API endpoint for managing IPD monitoring, the same setting controlled by the In-product diagnostics monitoring toggle in the user interface: /rest/api/2/monitoring/ipd.
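A minimal Java sketch of calling this endpoint with the java.net.http client follows. Only the path comes from this article; the assumptions that GET returns the current setting, that basic authentication is accepted, and that the response is JSON are illustrative, so check the REST API reference for your Jira version before relying on them. The class name, base URL, and credentials are hypothetical.

```java
import java.net.URI;
import java.net.http.HttpRequest;
import java.nio.charset.StandardCharsets;
import java.util.Base64;

final class IpdRest {
    /** Endpoint path introduced in Jira 9.5. */
    static final String IPD_PATH = "/rest/api/2/monitoring/ipd";

    private IpdRest() {}

    /** Builds a GET request for the IPD monitoring endpoint (assumed to report the current state). */
    static HttpRequest statusRequest(String baseUrl, String user, String password) {
        String credentials = Base64.getEncoder()
                .encodeToString((user + ":" + password).getBytes(StandardCharsets.UTF_8));
        return HttpRequest.newBuilder(URI.create(stripTrailingSlash(baseUrl) + IPD_PATH))
                .header("Authorization", "Basic " + credentials)
                .header("Accept", "application/json")
                .GET()
                .build();
    }

    private static String stripTrailingSlash(String url) {
        return url.endsWith("/") ? url.substring(0, url.length() - 1) : url;
    }
}
```

To send it, pass the request to HttpClient.newHttpClient().send(request, HttpResponse.BodyHandlers.ofString()) and inspect the status code and body.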

Log formatting

Writing to atlassian-jira-ipd-monitoring.log is done via log4j. Its configuration is managed in log4j.properties.

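```properties
#####################################################
# In-product diagnostics monitoring logging
#####################################################

# Appender that writes IPD snapshots to atlassian-jira-ipd-monitoring.log
log4j.appender.ipd=com.atlassian.jira.logging.JiraHomeAppender
log4j.appender.ipd.File=atlassian-jira-ipd-monitoring.log
# Roll over at 20480 KB, keeping up to 5 backup files
log4j.appender.ipd.MaxFileSize=20480KB
log4j.appender.ipd.MaxBackupIndex=5
# Each entry: timestamp (%d), message (%m), newline (%n)
log4j.appender.ipd.layout=com.atlassian.logging.log4j.NewLineIndentingFilteringPatternLayout
log4j.appender.ipd.layout.ConversionPattern=%d %m%n

# IPD monitoring messages go to the filelog appender defined elsewhere in log4j.properties
log4j.logger.ipd-monitoring = INFO, filelog
log4j.additivity.ipd-monitoring = false

# IPD data snapshots go to the ipd appender above; additivity=false stops propagation to parent loggers
log4j.logger.ipd-monitoring-data-logger = INFO, ipd
log4j.additivity.ipd-monitoring-data-logger = false
```

Log contents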

By default, a concise set of data is included in each log entry. An extended set of data can be logged by enabling the com.atlassian.jira.in.product.diagnostics.extended.logging feature flag.

To enable the extended data:

- Go to <JIRA_URL>/secure/admin/SiteDarkFeatures!default.jspa, where <JIRA_URL> is the base URL of your Jira instance.

- In the Enable dark feature text area, enter com.atlassian.jira.in.product.diagnostics.extended.logging.enabled.

- Select Add.

To disable the extended data, in the Site Wide Dark Features panel, find com.atlassian.jira.in.product.diagnostics.extended.logging.enabled and select Disable.

The following tables show the structure of the concise and extended logging formats.

Metrics exposed through JMX always use the extended format.

Concise data

| MBean Type | Properties | Attributes |
|---|---|---|
| Counter | timestamp, label, attributes | |
| Value | | |
| Statistics | | |

Extended data

| MBean Type | Properties | Attributes |
|---|---|---|
| Counter | timestamp, label, attributes, objectName | |
| Value | | |
| Statistics | | |

Definitions of metric attributes

Processing properties

The JMX polling interval is fixed at 60 seconds and can't be changed.

The log file polling interval defaults to 60 seconds and can be changed with the system property jira.diagnostics.ipdlog.poll.seconds. This interval controls how often the JMX values are polled and written to atlassian-jira-ipd-monitoring.log.
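To check which value a running Jira JVM actually has, you can read its system properties over JMX through the standard Runtime MXBean. This minimal Java sketch falls back to the 60-second default when the property isn't set. It reports the JVM's configuration, not a value read from Jira itself, and the class and method names are hypothetical.

```java
import java.io.IOException;
import java.lang.management.ManagementFactory;
import java.lang.management.RuntimeMXBean;
import java.util.Map;
import javax.management.MBeanServerConnection;

final class IpdPollSetting {
    static final String PROPERTY = "jira.diagnostics.ipdlog.poll.seconds";
    static final int DEFAULT_SECONDS = 60;

    private IpdPollSetting() {}

    /** Interval from a system-property map, or the 60-second default when unset or blank. */
    static int fromProperties(Map<String, String> systemProperties) {
        String value = systemProperties.get(PROPERTY);
        if (value == null || value.trim().isEmpty()) {
            return DEFAULT_SECONDS;
        }
        return Integer.parseInt(value.trim());
    }

    /** Reads the property from a remote JVM through its platform Runtime MXBean. */
    static int fromRemoteJvm(MBeanServerConnection connection) throws IOException {
        RuntimeMXBean runtime = ManagementFactory.newPlatformMXBeanProxy(
                connection, ManagementFactory.RUNTIME_MXBEAN_NAME, RuntimeMXBean.class);
        return fromProperties(runtime.getSystemProperties());
    }
}
```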