Database Setup for Pgpool-II

Run and configure the Pgpool-II environment

For illustration in this document, we're going to use Docker images from Bitnami by VMware. According to the official Pgpool documentation, this approach has several benefits:

- Bitnami closely tracks upstream source changes and promptly publishes new versions of this image using our automated systems.

- With Bitnami images, the latest bug fixes and features are available as soon as possible.

First, you need to set up Postgres nodes. They must be accessible to one another. They can be a part of the same private subnet or be exposed to the Internet, though exposure to the Internet isn’t recommended.

Create a primary PostgreSQL node on a separate machine. Run the following command:

docker network create my-network --driver bridgeThe launch of the node will look as follows:

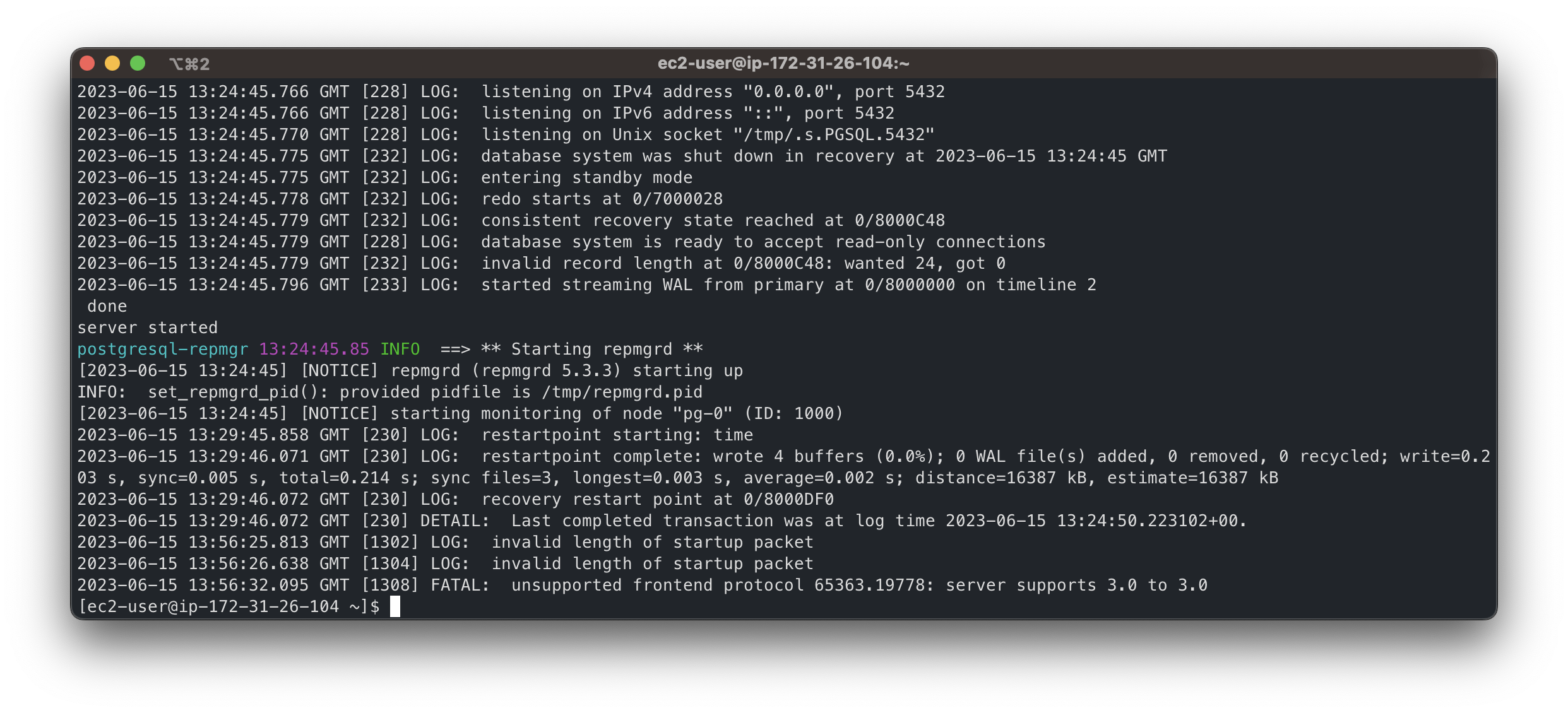

docker run --detach --rm --name pg-0 \ -p 5432:5432 \ --network my-network \ --env REPMGR_PARTNER_NODES={PG-0-IP},{PG-1-IP} \ --env REPMGR_NODE_NAME=pg-0 \ --env REPMGR_NODE_NETWORK_NAME={PG-0-IP} \ --env REPMGR_PRIMARY_HOST={PG-0-IP} \ --env REPMGR_PASSWORD=repmgrpass \ --env POSTGRESQL_POSTGRES_PASSWORD=adminpassword \ --env POSTGRESQL_USERNAME=customuser \ --env POSTGRESQL_PASSWORD=custompassword \ --env POSTGRESQL_DATABASE=customdatabase \ --env BITNAMI_DEBUG=true \ bitnami/postgresql-repmgr:latestThe message

[NOTICE] starting monitoring of node "pg-0" (ID: 1000)confirms the successful creation of the primary node.Create a standby node on a separate machine. Run the following command:

docker network create my-network --driver bridgeThe launch of the node will look as follows:

docker run --detach --rm --name pg-1 \ -p 5432:5432 \ --network my-network \ --env REPMGR_PARTNER_NODES={PG-0-IP},{PG-1-IP} \ --env REPMGR_NODE_NAME=pg-1 \ --env REPMGR_NODE_NETWORK_NAME={PG-1-IP} \ --env REPMGR_PRIMARY_HOST={PG-0-IP} \ --env REPMGR_PASSWORD=repmgrpass \ --env POSTGRESQL_POSTGRES_PASSWORD=adminpassword \ --env POSTGRESQL_USERNAME=customuser \ --env POSTGRESQL_PASSWORD=custompassword \ --env POSTGRESQL_DATABASE=customdatabase \ --env BITNAMI_DEBUG=true \ bitnami/postgresql-repmgr:latest- Replace

{PG-0-IP},{PG-1-IP}in the code sample with comma-separated IP addresses that can be used to access pg-0 and pg-1 nodes. For example,15.237.94.251,35.181.56.169. - To establish a mutual connection, the standby node tries to access the primary node right after starting.

- Replace

Create a Pgpool balancer middleware node with the reference to the other nodes. Run the following command:

docker network create my-network --driver bridgeThe launch of the node will look as follows:

docker run --detach --name pgpool --network my-network \ -p 5432:5432 \ --env PGPOOL_BACKEND_NODES=0:{PG-0-HOST},1:{PG-1-HOST} \ --env PGPOOL_SR_CHECK_USER=postgres \ --env PGPOOL_SR_CHECK_PASSWORD=adminpassword \ --env PGPOOL_ENABLE_LDAP=no \ --env PGPOOL_USERNAME=customuser \ --env PGPOOL_PASSWORD=custompassword \ --env PGPOOL_POSTGRES_USERNAME=postgres \ --env PGPOOL_POSTGRES_PASSWORD=adminpassword \ --env PGPOOL_ADMIN_USERNAME=admin \ --env PGPOOL_ADMIN_PASSWORD=adminpassword \ --env PGPOOL_AUTO_FAILBACK=yes \ --env PGPOOL_BACKEND_APPLICATION_NAMES=pg-0,pg-1 \ bitnami/pgpool:latest- Replace

{PG-0-HOST},{PG-1-HOST}in the code sample with the host addresses of the pg-0 and pg-1 nodes, including ports. For example,15.237.94.251:5432.

Learn more about the configuration of the Bitnami containers

- Replace

Now, you can use the

pgpoolcontainer as an entry point to the database cluster. To connect to thepgpoolcontainer, use the following command:psql -h {PGPOOL-HOST} -p 5432 -U postgres -d repmgrReplace

{PGPOOL-HOST}in the code sample with thepgpoolnode address. For example,34.227.66.69.

To confirm the successful deployment, access the tablerepmgr.nodesby using the following SQL query:SELECT * FROM repmgr.nodes;The output must show all the information about each node’s state:

To continue the configuration, use the guidelines from Database Setup for PostgreSQL. The steps are the same for creating a user and database, installing Confluence, and using the Confluence setup wizard.